The Dawn of the Vera Rubin Superchip: NVIDIA’s Vision for Next-Gen AI Infrastructure

At its GTC 2025 developer event, NVIDIA took the wraps off what many consider a defining moment for the future of artificial intelligence infrastructure: the debut of the upcoming “Vera Rubin” superchip platform. The system pairs a completely new custom Arm-based CPU (code-named Vera) with a new GPU architecture (code-named Rubin) in a single rack-scale design. The result? A seismic shift in AI computing power and memory bandwidth, designed for training and inference at scales previously dreamed of but seldom delivered.

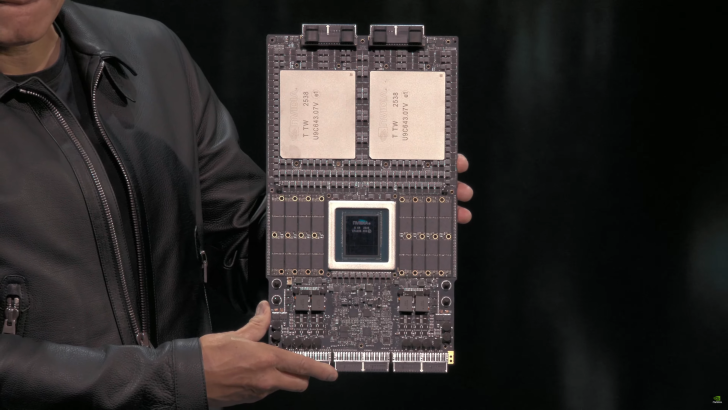

### A closer look at the architecture

NVIDIA’s announcement reveals that the Vera CPU packs an astonishing 88 custom Arm cores and supports 176 threads, connected to the GPU through a high-bandwidth NVLink-C2C interface rated at 1.8 TB/s. Meanwhile, the Rubin GPU embraces next-gen packaging and memory: each chip comprises two reticle-size dies, supplied by TSMC, and each supports up to eight HBM4 memory sites in the first wave.

The first platform built around these chips bears the name NVL144, indicating a rack containing 144 GPU dies (NVIDIA's naming counts each die of a two-die Rubin package separately). NVIDIA says this system is capable of delivering up to 3.6 exaflops of FP4 inference performance and 1.2 exaflops of FP8 training performance, roughly 3.3× the capabilities of the predecessor Blackwell Ultra NVL72 platform. In addition, the memory architecture is scaled significantly: the platform supports 13 TB/s of HBM4 bandwidth, 75 TB of “fast memory” across the system, and interconnect speeds reaching 260 TB/s over NVLink and 28.8 TB/s across racks with CX9.
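To put the rack-level figures in perspective, the arithmetic below splits the quoted throughput evenly across the 144 units in the platform name and backs out the predecessor baseline implied by the "roughly 3.3×" claim. This is a rough sketch, not an official breakdown: the even split is an idealisation, and the function names are illustrative.

```python
# Figures quoted in the article for the Vera Rubin NVL144 rack.
FP4_INFERENCE_EXAFLOPS = 3.6
FP8_TRAINING_EXAFLOPS = 1.2
UNIT_COUNT = 144                 # the "144" in NVL144
SPEEDUP_VS_PREDECESSOR = 3.3     # "roughly 3.3x" per the article

PETA_PER_EXA = 1000


def per_unit_petaflops(rack_exaflops: float, unit_count: int) -> float:
    """Split a rack-level figure evenly across units (an idealisation)."""
    return rack_exaflops * PETA_PER_EXA / unit_count


def implied_baseline_exaflops(rack_exaflops: float, speedup: float) -> float:
    """Back out the predecessor figure implied by an 'N times faster' claim."""
    return rack_exaflops / speedup


if __name__ == "__main__":
    fp4 = per_unit_petaflops(FP4_INFERENCE_EXAFLOPS, UNIT_COUNT)
    fp8 = per_unit_petaflops(FP8_TRAINING_EXAFLOPS, UNIT_COUNT)
    base = implied_baseline_exaflops(FP4_INFERENCE_EXAFLOPS, SPEEDUP_VS_PREDECESSOR)
    print(f"FP4 inference per unit: {fp4:.1f} PFLOPS")
    print(f"FP8 training per unit:  {fp8:.1f} PFLOPS")
    print(f"Implied predecessor FP4 rack figure: {base:.2f} exaflops")
```

Under these assumptions each unit contributes about 25 PFLOPS of FP4 inference, and the implied predecessor rack lands just above one exaflop.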

### And what’s coming next: the Rubin Ultra wave

NVIDIA isn’t stopping with NVL144. The company has already outlined a follow-on platform for the second half of 2027 known as NVL576. That rack will contain 576 Rubin Ultra GPU dies, four per package, together delivering up to 15 exaflops of FP4 inference and 5 exaflops of FP8 training. Memory bandwidth climbs to 4.6 PB/s of HBM4e, with the total “fast memory” nearing 365 TB. NVLink and CX9 interconnects scale even further, to 1.5 PB/s and 115.2 TB/s respectively. The numbers are eye-watering and reflect NVIDIA’s ambition to dominate next-generation AI workloads from the ground up.
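A quick way to see how unevenly the two generations scale is to divide the NVL576 figures by their NVL144 counterparts. The sketch below uses only numbers quoted in this article; the dictionary keys and the helper name are illustrative. HBM bandwidth is left out because it is not clear that the two quoted figures are measured on the same basis.

```python
# Rack-level figures as quoted in the article.
NVL144 = {
    "fp4_inference_exaflops": 3.6,
    "fp8_training_exaflops": 1.2,
    "fast_memory_tb": 75.0,
    "nvlink_tb_per_s": 260.0,
    "cx9_tb_per_s": 28.8,
}
NVL576 = {
    "fp4_inference_exaflops": 15.0,
    "fp8_training_exaflops": 5.0,
    "fast_memory_tb": 365.0,
    "nvlink_tb_per_s": 1500.0,   # 1.5 PB/s expressed in TB/s
    "cx9_tb_per_s": 115.2,
}


def scaling_factors(old: dict, new: dict) -> dict:
    """Return new/old for every metric present in both dictionaries."""
    return {key: new[key] / old[key] for key in old if key in new}


if __name__ == "__main__":
    for metric, factor in scaling_factors(NVL144, NVL576).items():
        print(f"{metric:<24} x{factor:.2f}")
```

On these numbers compute grows by a little over 4×, while fast memory and NVLink bandwidth grow faster (close to 5× and 6×), consistent with the article's point that data movement is getting as much attention as raw compute.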

### Why this matters

For one, the integration of a custom CPU and GPU under a unified architecture signals a new era of AI-centric hardware design. By controlling both ends of the stack, NVIDIA gains tighter coupling of memory, communication, compute, and system-level performance. Moreover, the jump from a roughly one-exaflop rack to multiple exaflops and then tens of exaflops shows how quickly AI scaling demands are growing. According to NVIDIA’s roadmap, these platforms are not only about brute-force compute: they are meant to serve next-gen AI models that demand longer context windows, more tokens per second, and richer reasoning capability.

Another key point: memory bandwidth and capacity have become major bottlenecks for large-scale generative AI models. The use of HBM4 and HBM4e, massive fast-memory pools, and ultra-high interconnect throughput all point to a system built for future-proofing deep learning, large-language models, multi-modal AI, and simulation workloads alike. As one compute-industry analyst puts it: “This platform is designed to collapse simulation, data and AI into a single, high-bandwidth, low-latency engine.”

### Production timeline and what to watch for

NVIDIA expects mass production of Rubin GPUs to begin in 2026 (Q3 or Q4) for the NVL144 platform, with Rubin Ultra arriving in the second half of 2027. Given how rapidly AI startups, hyperscalers, and HPC centres are deploying today, that gives roughly one year for ecosystem firms to gear up around software, cooling infrastructure, and power delivery. One noteworthy point: an NVL576 rack is projected to draw ~600 kW, underscoring the infrastructure demands of ultra-scale AI.
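To give the ~600 kW projection some scale, the sketch below combines it with the 15 exaflops of FP4 inference quoted for NVL576. Both inputs are projections from the article. Treating the rack as running at full power all year is an assumption made purely for illustration, and the function names are hypothetical.

```python
# Projections quoted in the article for an NVL576 rack.
RACK_POWER_KW = 600.0
FP4_INFERENCE_EXAFLOPS = 15.0

HOURS_PER_YEAR = 8760      # 365 days x 24 hours
PETA_PER_EXA = 1000


def petaflops_per_kw(exaflops: float, power_kw: float) -> float:
    """Peak throughput per kilowatt of rack power."""
    return exaflops * PETA_PER_EXA / power_kw


def annual_energy_gwh(power_kw: float, utilisation: float = 1.0) -> float:
    """Energy used in a year at a given average utilisation.

    utilisation=1.0 (constant full load) is an illustrative assumption.
    """
    kwh = power_kw * HOURS_PER_YEAR * utilisation
    return kwh / 1_000_000   # kWh -> GWh


if __name__ == "__main__":
    print(f"{petaflops_per_kw(FP4_INFERENCE_EXAFLOPS, RACK_POWER_KW):.1f} PFLOPS per kW (peak FP4)")
    print(f"{annual_energy_gwh(RACK_POWER_KW):.3f} GWh per year at full load")
```

At constant full load, a single rack would consume a little over 5 GWh per year, which is why cooling and power delivery feature so heavily in deployment planning.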

### Considerations and possible caveats

While the spec sheet is impressive, there are a few realities to bear in mind. First, many of the numbers refer to FP4 or FP8 numerical formats, precision types tailored for AI inference and training rather than traditional double-precision HPC workloads. For example, for the upcoming U.S. Department of Energy “Doudna” system being built around the Vera Rubin architecture, double-precision (FP64) compute is reportedly significantly lower in some cases, because the design targets AI first. Second, early sampling and production always carry risks: yield, cooling, interconnect validation, software stack readiness, and power/infrastructure constraints can all influence actual deployment timelines and performance ceilings.
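To illustrate why FP4 throughput does not translate to traditional HPC work, the sketch below rounds ordinary numbers to the nearest value a 4-bit float can represent. It assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit) described in the OCP Microscaling specification. The article does not say which FP4 encoding NVIDIA's figures refer to, and real hardware pairs such values with per-block scale factors that are omitted here.

```python
# Non-negative magnitudes representable in E2M1 (assumed FP4 layout).
E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)


def quantize_fp4(x: float) -> float:
    """Round x to the nearest E2M1 value, saturating at +/-6.

    Ties resolve toward the smaller magnitude, a simplification of the
    round-to-nearest-even rule used by real hardware.
    """
    sign = -1.0 if x < 0 else 1.0
    magnitude = min(abs(x), E2M1_MAGNITUDES[-1])   # saturate instead of overflowing
    nearest = min(E2M1_MAGNITUDES, key=lambda m: abs(m - magnitude))
    return sign * nearest


if __name__ == "__main__":
    for value in (0.3, 2.4, -5.2, 100.0):
        print(f"{value:>7} -> {quantize_fp4(value)}")
```

With only eight magnitudes available, values such as 2.4 collapse to 2.0 and anything above 6 saturates. Neural networks tolerate this with careful scaling, but simulation codes that depend on FP64 accuracy generally cannot.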

### What it means for the broader AI and tech scene

From a competitive standpoint, NVIDIA’s roadmap sets a very high bar. For example, AMD's recently unveiled hardware shows FP4 rack systems climbing to a few exaflops, but still short of the 3.6 exaflops of NVL144 or the 15 exaflops of NVL576 at this stage. That gap demonstrates how critical scale, system integration and memory bandwidth are becoming. On the software side, this means AI model developers must prepare for architectures where compute isn’t the only bottleneck: data movement, memory capacity, cooling and interconnect latency will matter equally.

### Final thoughts

The Vera Rubin platform marks a bold statement: the era of data-centre AI hardware is shifting from incremental upgrades to disruptive change. By tightly combining a new custom CPU, high-density GPU packaging, next-gen memory stacks and leading-edge interconnects, NVIDIA aims to deliver infrastructure ready for the most demanding generative AI and reasoning workloads. If all goes to plan, the racks of 2026-27 may well look very different from today’s hardware: not just bigger, but architected from the ground up for the AI-first era.

As the comment below suggests, this hardware may feel like science fiction, but it’s rapidly becoming reality. And that reality is shaping the next wave of intelligent systems.

1 comment

Man the die size is absurd on that gpu core!!!!