NVIDIA Rubin CPX GPU Ushers in the Era of Million-Token AI and Next-Level GenAI Performance

NVIDIA has once again raised the stakes in the race for artificial intelligence computing power with the introduction of its Rubin CPX GPU, a processor specifically engineered to tackle tasks that were once considered impossible. From million-token coding assistants to generative video at scale, the Rubin CPX is not merely an upgrade – it represents a new category of GPU designed to handle massive-context AI workloads.

The Rubin CPX arrives as part of NVIDIA’s broader Rubin platform, which also includes the upcoming Vera CPUs and the ConnectX-9 SuperNICs. All of these pieces will come together inside the NVIDIA Vera Rubin NVL144 CPX platform, a powerhouse MGX system that promises to redefine what data centers can achieve. According to NVIDIA, this rack-sized monster will deliver 8 exaflops of AI compute, which is 7.5 times the AI performance of the current GB300 NVL72 systems, along with 100 terabytes of fast memory and 1.7 petabytes per second of memory bandwidth.
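
As a quick sanity check on those figures, the quoted speedup can be inverted to see what baseline it implies for a GB300 NVL72 rack. This is only a back-of-the-envelope sketch: the function name is made up for illustration, and it assumes both numbers are measured at the same precision, which the article does not state.

```python
# Figures quoted in the article for the Vera Rubin NVL144 CPX rack.
CPX_RACK_EXAFLOPS = 8.0
SPEEDUP_OVER_GB300_NVL72 = 7.5


def implied_baseline_exaflops(new_exaflops: float, speedup: float) -> float:
    """Return the baseline throughput implied by a quoted speedup factor."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return new_exaflops / speedup


baseline = implied_baseline_exaflops(CPX_RACK_EXAFLOPS, SPEEDUP_OVER_GB300_NVL72)
print(f"Implied GB300 NVL72 AI compute: {baseline:.2f} exaflops")
```

Under that assumption, the 7.5x claim places a GB300 NVL72 rack at a little over one exaflop.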

But what really sets Rubin CPX apart is its focus on long-context processing. For coding assistants, this means moving beyond simple auto-completion or snippet generation and enabling AI to truly understand, analyze, and optimize enormous software projects that involve millions of tokens. In the realm of video, the chip’s design allows AI models to process up to one million tokens for an hour of content – an order of magnitude increase over current capabilities. This leap matters because generative video, high-quality video search, and long-form content creation all demand handling huge sequences of data without cutting corners.
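
To get a feel for what "one million tokens for an hour of content" means, the budget can be broken down per second and per frame. The sketch below uses only the article's one-million-token figure; the 24 frames-per-second rate is an assumption chosen for illustration, not something NVIDIA has specified.

```python
TOKENS_PER_HOUR_OF_VIDEO = 1_000_000  # figure quoted in the article
SECONDS_PER_HOUR = 3600
ASSUMED_FPS = 24  # hypothetical frame rate, for illustration only


def tokens_per_second(total_tokens: int, duration_s: int) -> float:
    """Average token budget per second of content."""
    return total_tokens / duration_s


def tokens_per_frame(total_tokens: int, duration_s: int, fps: int) -> float:
    """Average token budget per frame at a given frame rate."""
    return total_tokens / (duration_s * fps)


per_second = tokens_per_second(TOKENS_PER_HOUR_OF_VIDEO, SECONDS_PER_HOUR)
per_frame = tokens_per_frame(TOKENS_PER_HOUR_OF_VIDEO, SECONDS_PER_HOUR, ASSUMED_FPS)
print(f"{per_second:.1f} tokens per second of video")
print(f"{per_frame:.1f} tokens per frame at {ASSUMED_FPS} fps")
```

That works out to roughly 278 tokens per second of video, so the challenge lies in holding the full hour in context at once rather than in the density of any single moment.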

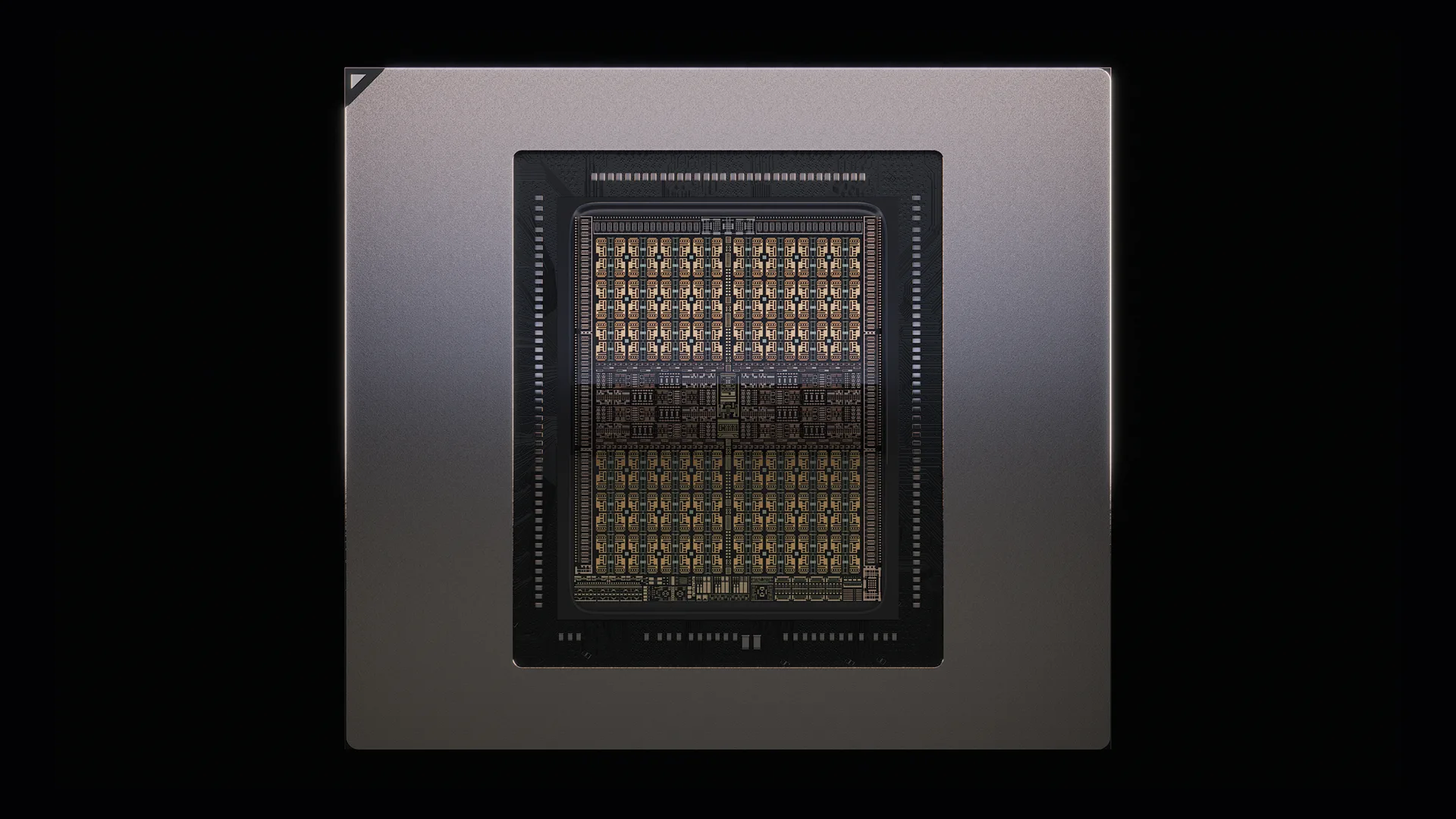

To achieve this, Rubin CPX integrates not just NVFP4 compute units but also dedicated video encoders and decoders within a single monolithic die. Unlike other upcoming Rubin family chips that rely on multiple reticle-sized dies (two for Rubin and four for Rubin Ultra), CPX embraces a streamlined monolithic approach that favors efficiency and cost-effectiveness. Interestingly, NVIDIA has chosen GDDR7 memory instead of HBM for the CPX GPU. At first glance this seems unusual for data center workloads, but NVIDIA emphasizes the balance between cost and performance: up to 128 GB of GDDR7 is meant to provide enough throughput for inference tasks while keeping deployments more affordable than HBM-based alternatives.

From a raw numbers standpoint, the Rubin CPX GPU promises 30 petaflops of NVFP4 AI compute per chip. When scaled into the full NVL144 CPX system, customers get not only more compute but also deliberate design trade-offs: a blend of speed, efficiency, and video-ready capabilities that align with modern AI workloads. NVIDIA also claims 3x the attention performance of its own GB300 NVL72.

To make the comparison clearer: the non-CPX Vera Rubin NVL144 platform, which pairs four Rubin GPUs and two Vera CPUs, tops out at 3.6 exaflops of NVFP4 compute, 75 terabytes of memory, and 1.4 PB/s of bandwidth. The CPX version raises all three figures, though unevenly: 8 exaflops of compute (about 2.2x), 100 TB of memory (about 1.3x), and 1.7 PB/s of bandwidth (about 1.2x). In other words, the biggest jump is in raw compute, while memory capacity and bandwidth grow more modestly.
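
Dividing the quoted figures for the two racks gives the per-metric scaling factors directly. The snippet below is a small illustrative calculation; the `RackSpec` class and its field names are invented for this example, and the inputs are just the numbers cited above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackSpec:
    name: str
    nvfp4_exaflops: float
    memory_tb: float
    bandwidth_pb_per_s: float


def scaling_factors(base: RackSpec, new: RackSpec) -> dict:
    """Ratio of each headline metric of `new` to the same metric of `base`."""
    return {
        "compute": new.nvfp4_exaflops / base.nvfp4_exaflops,
        "memory": new.memory_tb / base.memory_tb,
        "bandwidth": new.bandwidth_pb_per_s / base.bandwidth_pb_per_s,
    }


nvl144 = RackSpec("Vera Rubin NVL144", 3.6, 75.0, 1.4)
nvl144_cpx = RackSpec("Vera Rubin NVL144 CPX", 8.0, 100.0, 1.7)

factors = scaling_factors(nvl144, nvl144_cpx)
for metric, ratio in factors.items():
    print(f"{metric}: {ratio:.2f}x")
```

On these inputs, compute scales by about 2.22x, memory by about 1.33x, and bandwidth by about 1.21x.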

Another highlight is the platform’s expanded video capabilities. With four times the NVENC and NVDEC capacity of its predecessors, Rubin CPX is positioned as a GenAI workhorse, ready to handle tasks ranging from synthetic training data generation to generative video content. This is crucial in a landscape where AI-driven media is becoming as central as text-based AI once was.

Looking ahead, NVIDIA has outlined a roadmap where Rubin CPX systems will become available by the end of 2026, with the Vera Rubin non-CPX systems expected slightly earlier in the second half of 2026. All of this is building toward an eventual unveiling of Rubin Ultra and, further out, the teased Feynman platform. The process technology for CPX has not yet been confirmed, but industry watchers anticipate TSMC’s N3 or even N2 nodes may be in play, ensuring cutting-edge performance and energy efficiency.

For developers, enterprises, and research institutions, the arrival of Rubin CPX signals more than just another upgrade cycle. It represents a paradigm shift: GPUs purpose-built to expand the horizons of what AI can process in one shot. Whether it’s optimizing codebases with millions of lines, generating high-fidelity long-form video, or powering massive conversational AI models, Rubin CPX is designed to push boundaries.

What’s clear is that NVIDIA is not resting on the laurels of the Grace Blackwell generation. With Rubin CPX, the company is aiming squarely at the AI workloads of tomorrow – workloads defined by unprecedented scale, complexity, and ambition.

2 comments

end of 2026 tho… thats a long wait 😭

gddr7 instead of hbm… ngl i thought thats a weird move but i guess makes sense if its cheaper