NVIDIA’s AI GPU Empire Faces New Pressures as Custom AI Chips Rise

For nearly a decade, NVIDIA has reigned supreme in artificial intelligence computing. Its GPUs power everything from ChatGPT’s training runs to the world’s most advanced autonomous systems. Yet as energy costs soar and hyperscalers hunt for cost-efficient performance, cracks are beginning to show in the company’s once-impenetrable fortress. The emergence of custom AI chips – known as application-specific integrated circuits (ASICs) – marks a pivotal moment for the industry, signaling both opportunity and disruption.

To unpack this shifting landscape, we revisited a detailed interview with Rahul Sen Sharma, President and Co-CEO of Indxx, a global index provider that tracks innovation sectors including AI semiconductors. Sharma offered a deep look at the balance of power between NVIDIA’s universal GPUs and the new generation of highly specialized AI silicon designed by the world’s largest tech firms.

The Unshakable Backbone of AI

NVIDIA’s dominance in the AI space is legendary. As of 2025, its GPUs control roughly 86% of the AI accelerator market, an achievement built not only on hardware prowess but on the strength of CUDA – the company’s proprietary software ecosystem. CUDA has evolved into the “lingua franca” of AI development, enabling engineers worldwide to build models optimized for NVIDIA’s hardware. That software moat has kept rivals at bay, particularly in China, where export restrictions might have otherwise crippled NVIDIA’s reach.

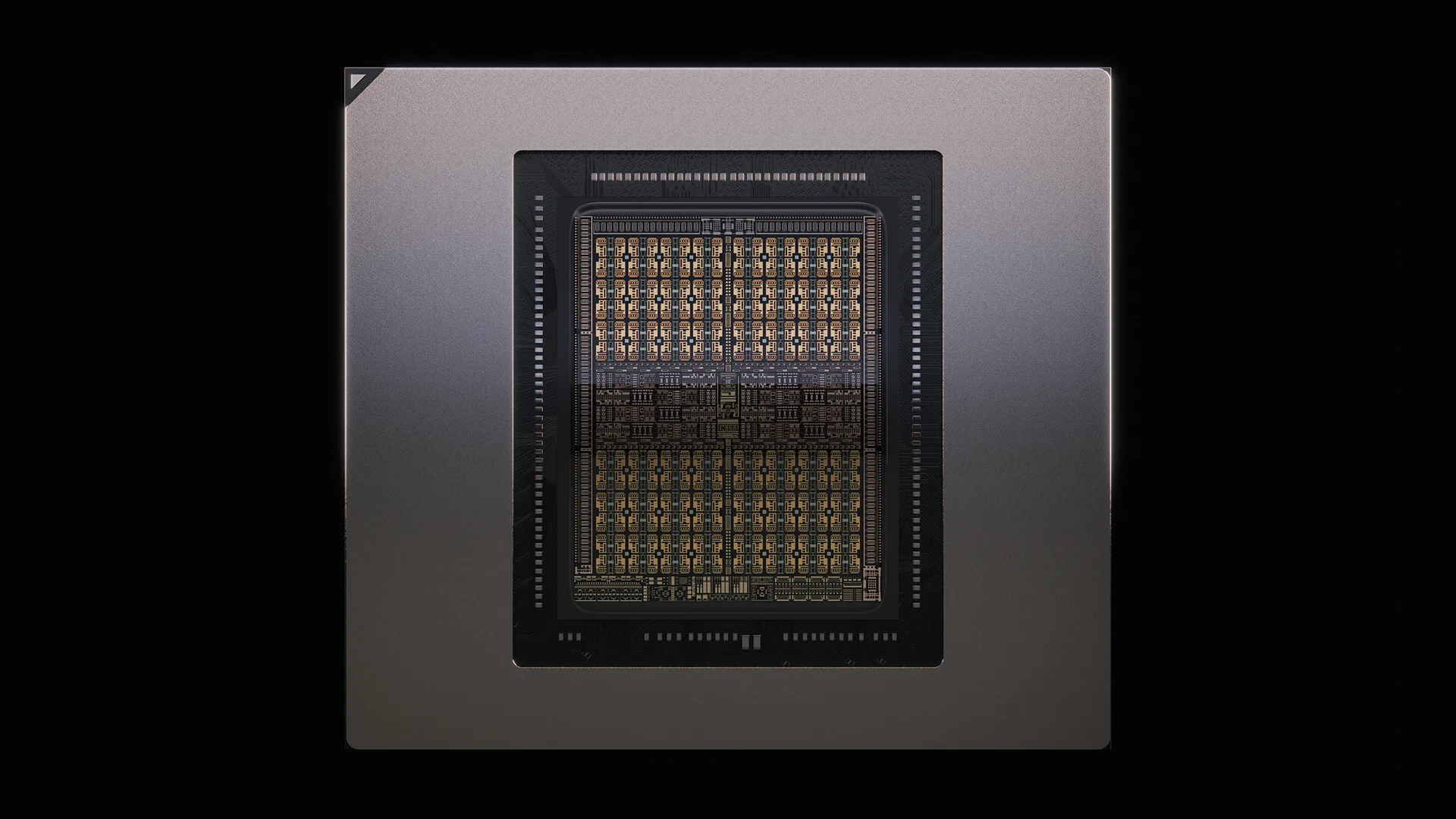

Its Blackwell series GPUs remain the default choice for model training and inference across global data centers. Whether it’s OpenAI’s massive language models or automotive AI stacks, NVIDIA’s technology forms the computational bedrock. “The combination of high-performance silicon and a mature, developer-friendly ecosystem makes NVIDIA indispensable,” Sharma noted. “It’s not just hardware – it’s a complete platform.”

However, the Monopoly Is Cracking

Despite this dominance, the winds are shifting. A new generation of chips is emerging, purpose-built for very specific AI workloads. These custom ASICs promise not just raw power, but efficiency – something increasingly vital as hyperscalers battle energy and cooling constraints.

OpenAI, for instance, is working with Broadcom to design chips tailored exclusively for inference, the process of running trained AI models at scale. Built on TSMC’s cutting-edge 3-nanometer process, these chips will initially be used in OpenAI’s own facilities rather than sold to the public. The idea: cut dependency on NVIDIA’s expensive, often scarce GPUs and build a tighter integration between hardware and software.

This isn’t an isolated trend. Google’s Tensor Processing Units (TPUs), Amazon’s Trainium and Inferentia, Microsoft’s Maia accelerators (developed under the codename Athena), and Meta’s MTIA silicon all share the same goals: reduce costs, improve energy efficiency, and reclaim control from NVIDIA. Collectively, these initiatives mark a strategic realignment toward in-house innovation, signaling a future where AI workloads are increasingly divided between general-purpose GPUs and task-specific ASICs.

Broadcom: The Dark Horse Rising

Among NVIDIA’s potential challengers, Broadcom has quickly become the most credible contender. Once known primarily for networking and connectivity hardware, Broadcom has seen its pivot into AI silicon pay off handsomely. Its AI-related revenue reached $4.4 billion in its fiscal Q2 2025 – a staggering 46% year-over-year increase. Much of this growth stems from custom chips built for hyperscalers, alongside Ethernet switches that move data across sprawling AI data centers.
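To put the growth figure in context, the year-ago revenue it implies can be backed out from the two numbers quoted above. The sketch below uses only the $4.4 billion and 46% figures from the article; the function name `implied_prior_value` is a hypothetical helper, and the result is a rough estimate rather than a reported number.

```python
def implied_prior_value(current: float, yoy_growth: float) -> float:
    """Back out the prior-period value from a current value and a
    fractional year-over-year growth rate (0.46 means 46%)."""
    return current / (1.0 + yoy_growth)


# Figures quoted in the article (billions of USD, fractional growth).
current_ai_revenue_bn = 4.4
yoy_growth = 0.46

prior_ai_revenue_bn = implied_prior_value(current_ai_revenue_bn, yoy_growth)
added_revenue_bn = current_ai_revenue_bn - prior_ai_revenue_bn

print(f"Implied year-ago AI revenue: about ${prior_ai_revenue_bn:.1f}B")
print(f"Implied increase over the year: about ${added_revenue_bn:.1f}B")
```

In other words, a 46% rise to $4.4 billion implies a base of roughly $3 billion a year earlier.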

“Broadcom’s collaboration with OpenAI positions it as a serious player in inference hardware,” Sharma explained. The company’s strategy – anchored by its acquisition of VMware and partnerships with major cloud providers – gives it a foothold in both hardware and software integration. While NVIDIA’s entrenched ecosystem makes it hard to dethrone, Broadcom’s approach signals that the AI hardware world is no longer a one-company race.

Over the next few years, analysts expect Broadcom’s custom ASICs to carve out significant market share, particularly in data center inference workloads where cost and power efficiency trump absolute performance.

The Economics of Cost-Performance

AI’s explosive growth comes at an enormous financial and environmental price. As Sharma pointed out, “Scaling AI purely on performance is no longer sustainable.” Data centers now consume unprecedented amounts of power and water, straining both budgets and environmental resources. Hyperscalers – Amazon, Google, Microsoft, and Meta – are under mounting pressure to balance performance with efficiency.

Amazon’s Trainium exemplifies this balancing act. While not as powerful as NVIDIA’s latest GPUs on paper, Trainium delivers a much lower total cost of ownership (TCO) and integrates seamlessly with AWS’s AI stack. This gives Amazon a long-term cost advantage and independence from GPU shortages. Google’s TPUs and Microsoft’s Athena silicon follow the same philosophy: own the hardware that powers your AI to optimize both economics and control.

In practice, most hyperscalers now run hybrid setups – using NVIDIA GPUs for high-performance model training, while relying on their custom chips for inference and internal workloads. The shift toward cost-performance optimization has transformed how tech giants plan AI expansion. It’s no longer about being the fastest; it’s about being the smartest spender.
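The cost-performance argument can be made concrete with a small calculation. The sketch below compares two hypothetical accelerators on total cost of ownership per unit of throughput. Every number in it (purchase prices, power draw, relative throughput, electricity price, PUE, service life) is an illustrative placeholder, not vendor or article data, and the simplified TCO model (purchase cost plus energy) is an assumption.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass
class Accelerator:
    name: str
    purchase_cost_usd: float    # hypothetical up-front price
    power_kw: float             # hypothetical average power draw
    relative_throughput: float  # work per hour, general-purpose chip = 1.0


def total_cost_of_ownership(chip: Accelerator, years: float,
                            usd_per_kwh: float, pue: float) -> float:
    """Simplified TCO: purchase cost plus energy cost, with PUE
    (power usage effectiveness) covering cooling and facility overhead."""
    energy_kwh = chip.power_kw * HOURS_PER_YEAR * years * pue
    return chip.purchase_cost_usd + energy_kwh * usd_per_kwh


def cost_per_unit_throughput(chip: Accelerator, years: float,
                             usd_per_kwh: float, pue: float) -> float:
    """TCO divided by relative throughput: lower is better."""
    tco = total_cost_of_ownership(chip, years, usd_per_kwh, pue)
    return tco / chip.relative_throughput


# Placeholder inputs chosen only to illustrate the trade-off.
general_purpose = Accelerator("general-purpose GPU", 30_000, 1.0, 1.0)
custom_chip = Accelerator("custom inference ASIC", 12_000, 0.5, 0.6)

for chip in (general_purpose, custom_chip):
    cost = cost_per_unit_throughput(chip, years=4, usd_per_kwh=0.10, pue=1.3)
    print(f"{chip.name}: ${cost:,.0f} per unit of throughput over 4 years")
```

With these placeholder inputs the slower chip still comes out cheaper per unit of work, which is the pattern the hybrid strategy relies on. Different inputs can reverse the result, so the comparison is only as good as the numbers fed into it.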

ASICs: The Next Leap in AI Hardware

When asked what improvements ASICs might see in the coming years, Sharma outlined several transformative directions:

- Energy Efficiency: Purpose-built design eliminates unnecessary circuitry, cutting energy usage by up to 30% versus CPUs or general-purpose GPUs. In power-hungry data centers, that’s a massive saving.

- Security Enhancements: Next-gen ASICs will incorporate tamper resistance and encryption-by-design to counter growing cybersecurity threats targeting AI infrastructure.

- Task Specialization: Unlike GPUs, which must handle diverse workloads, ASICs are tailored for specific tasks – such as inference or video processing – resulting in higher performance per watt.

- AI Integration: Expect ASICs to integrate more tightly with other AI systems like TPUs, enabling advanced real-time content understanding, from streaming video analytics to AI-assisted medical imaging.

- Memory Disaggregation: With technologies like Compute Express Link (CXL), ASICs can tap into shared memory pools, dramatically reducing wasted capacity and improving AI model scalability.

These factors make ASICs an indispensable part of the next wave of AI infrastructure – leaner, faster, and smarter by design.

Who Benefits From the ASIC Boom?

The adoption of ASICs is setting off a ripple effect across the tech ecosystem. Hyperscalers gain independence, while semiconductor manufacturers and equipment providers ride the wave of fresh demand. Sharma identified several key beneficiaries:

- Hyperscalers: Amazon, Google, Microsoft, and Meta each benefit by developing in-house chips that reduce NVIDIA dependency and optimize specific workloads.

- Foundries and Semiconductor Firms: TSMC and Samsung lead fabrication, while Broadcom, Marvell, AMD, and Intel are expanding into semi-custom AI chips and heterogeneous integration.

- EDA and Design Software Companies: Synopsys, Cadence, and Siemens EDA provide the design tools that make custom silicon possible.

- Memory and Interconnect Vendors: Companies like Micron, SK Hynix, and Rambus are retooling to support CXL-based architectures.

- Cooling and Power Specialists: Vertiv, Schneider Electric, and CoolIT Systems stand to gain from ASIC-driven data center upgrades.

Each layer of the stack, from chip design to data center cooling, is undergoing reinvention. In Sharma’s view, the next phase of AI growth will not just be about algorithms – it will be about physical infrastructure, efficiency, and sustainability.

Cooling Wars: Rubin GPUs Turn Up the Heat

NVIDIA’s newly announced Rubin and Rubin Ultra GPUs, revealed at GTC 2025, underscore this challenge. With per-rack power density hitting record highs, traditional air cooling is reaching its limits. “Rubin’s power profile is a game-changer for data center design,” Sharma observed. “You simply can’t run thousands of these chips with air cooling alone anymore.”

That’s where liquid and immersion cooling technologies step in. CoolIT Systems, a leader in liquid cooling, showcased new AI-ready coldplates capable of delivering up to 30% better thermal performance. Asetek, another veteran of the cooling world, continues to evolve its solutions for AI server environments. Meanwhile, industrial players like Vertiv and Schneider Electric are modernizing entire facilities to support hybrid cooling infrastructure – part air, part liquid.

As Rubin GPUs push thermal boundaries, the cooling market becomes the next battleground in AI infrastructure. Cooling innovation, once a backroom engineering topic, is now front and center in hyperscaler investment strategies.

The Bigger Picture

NVIDIA remains the titan of AI hardware, but the rise of custom chips marks a structural shift in computing economics. Energy constraints, environmental considerations, and supply limitations are forcing even the biggest companies to rethink how they scale AI. The coming years will see a dual-track evolution: NVIDIA’s powerful GPUs for flexible workloads, and highly specialized ASICs for efficiency-driven tasks.

In this evolving landscape, no single company will hold total control. Instead, a new equilibrium is forming – where efficiency, specialization, and innovation decide who leads the next phase of AI’s technological revolution.

“NVIDIA won’t vanish,” Sharma concluded. “But it will have to share the stage.”
