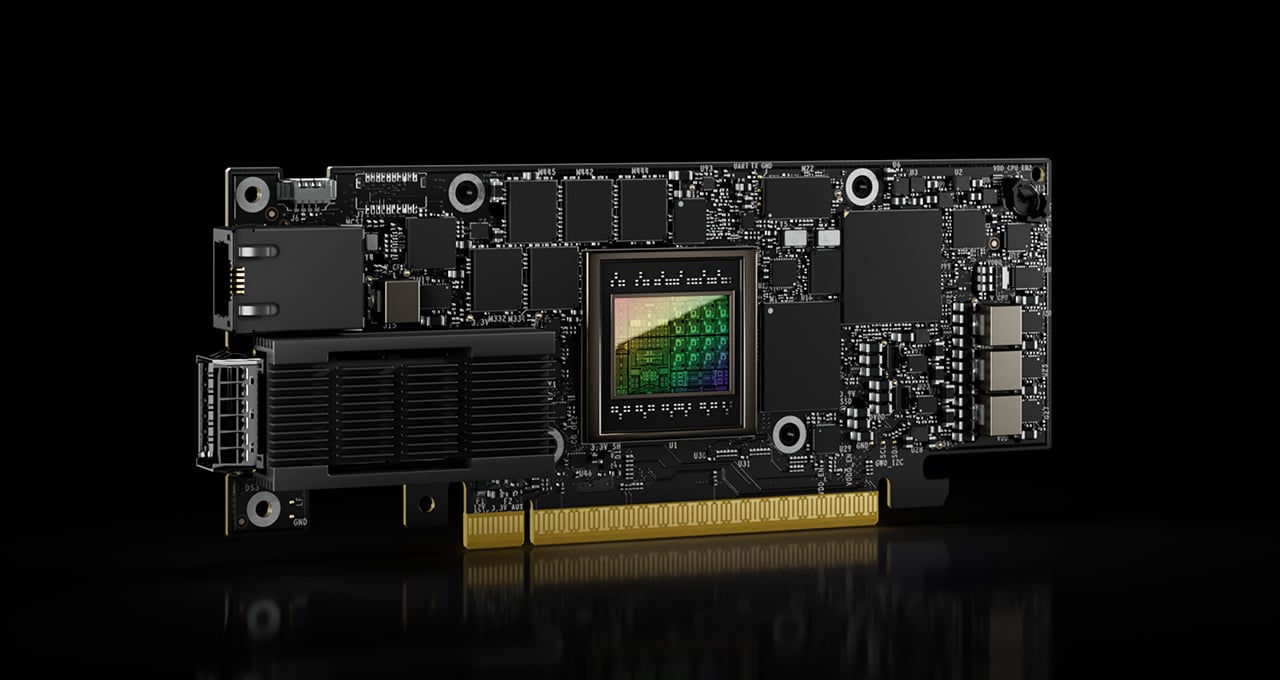

NVIDIA has unveiled the ConnectX-8 SuperNIC, a next-generation network interface designed to power its Blackwell GPU systems.

NVIDIA positions the ConnectX-8 as far more than a conventional NIC: a dedicated ASIC that bridges GPUs with massive AI clusters so that both training and inference workloads run efficiently.

According to NVIDIA, AI training and inference place very different demands on networks. Training runs are long and synchronized, so tail latency can cripple efficiency: every GPU waits for the slowest flow to finish. Inference, by contrast, is latency-sensitive and interacts heavily with the outside world. To handle both, ConnectX-8 introduces what NVIDIA calls a fungible end-to-end network policy that adapts across workloads.
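To make the tail-latency point concrete, here is a minimal sketch of a synchronized training step in which the step time is set by the slowest network flow. The function names and the millisecond figures are hypothetical illustrations, not NVIDIA measurements.

```python
# Illustrative model only: the flow times below are made-up numbers.

def step_time(flow_times_ms):
    """A synchronized step completes only when the slowest flow completes."""
    return max(flow_times_ms)


def efficiency(flow_times_ms):
    """Mean flow time divided by step time; 1.0 means nobody waits."""
    mean = sum(flow_times_ms) / len(flow_times_ms)
    return mean / step_time(flow_times_ms)


uniform = [10.0] * 8                  # eight flows, all equally fast
one_straggler = [10.0] * 7 + [30.0]   # one flow hits a congested path

print(step_time(uniform), efficiency(uniform))
print(step_time(one_straggler), round(efficiency(one_straggler), 3))
```

In this toy model a single slow flow out of eight triples the step time, which is why trimming the tail matters more for training than improving the average.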

The SuperNIC supports both Spectrum-X Ethernet and Quantum-X InfiniBand fabrics. It delivers 800 Gb/s of network throughput and integrates a PCIe switch with 48 lanes of PCIe Gen6. The hardware also includes integrated load balancing, congestion control, RDMA technology that NVIDIA says is deployed across millions of GPUs, and enterprise-grade security. Its deep programmability and data-path acceleration (featuring a 16T RISC-V event processor) are meant to let data centers scale AI services more efficiently.
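As a rough sanity check on those figures, the sketch below compares raw PCIe Gen6 lane bandwidth with the 800 Gb/s network rate. It assumes the PCIe 6.0 signaling rate of 64 GT/s per lane per direction and ignores FLIT, FEC, and protocol overhead, so usable bandwidth will be somewhat lower. How the 48 lanes are split between host, GPU, and storage links is not stated in the article; the x16 case is only an illustrative assumption.

```python
# Back-of-the-envelope arithmetic, not a vendor specification.
PCIE_GEN6_GTPS_PER_LANE = 64   # PCIe 6.0 raw rate per lane, per direction
NETWORK_GBPS = 800             # ConnectX-8 network throughput from the article
TOTAL_LANES = 48               # lanes on the integrated PCIe switch


def raw_pcie_gbps(lanes, gtps_per_lane=PCIE_GEN6_GTPS_PER_LANE):
    """Raw one-direction bandwidth in Gb/s, before any encoding overhead."""
    return lanes * gtps_per_lane


x16_link = raw_pcie_gbps(16)             # hypothetical single x16 link
all_lanes = raw_pcie_gbps(TOTAL_LANES)   # aggregate across the whole switch

print(f"x16 Gen6 link: {x16_link} Gb/s raw")
print(f"48 Gen6 lanes: {all_lanes} Gb/s raw")
print(f"aggregate / network rate: {all_lanes / NETWORK_GBPS:.2f}x")
```

Under these assumptions a single x16 Gen6 link already exceeds the 800 Gb/s network rate on paper, and the full 48 lanes leave headroom for additional devices behind the switch.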

ConnectX-8 is first being deployed in NVIDIA’s Blackwell GB300 NVL72 systems, which also combine Grace CPUs, Blackwell Ultra GPUs, and NVLink-C2C bandwidth sharing. Each NVL72 board features dual ConnectX-8 (CX8) PCIe switches to optimize bandwidth and QoS between CPUs, GPUs, and storage.

NVIDIA's performance figures underline its potential: compared to standard RDMA NICs, Spectrum-X Ethernet integrated with ConnectX-8 is said to achieve up to 1.6x higher effective bandwidth, 2.2x higher all-reduce bandwidth, and virtually zero tail latency. Telemetry collection is reportedly up to 1000x faster.

Unlike competing approaches such as AMD’s 800G Ethernet IP cores, which are essentially building blocks for custom hardware, NVIDIA is delivering ready-to-deploy, fully integrated hardware for AI-scale networking. With ConnectX-8, the company is betting on turnkey solutions that scale immediately across massive GPU clusters, positioning its Blackwell systems as the backbone of next-generation AI infrastructure.
