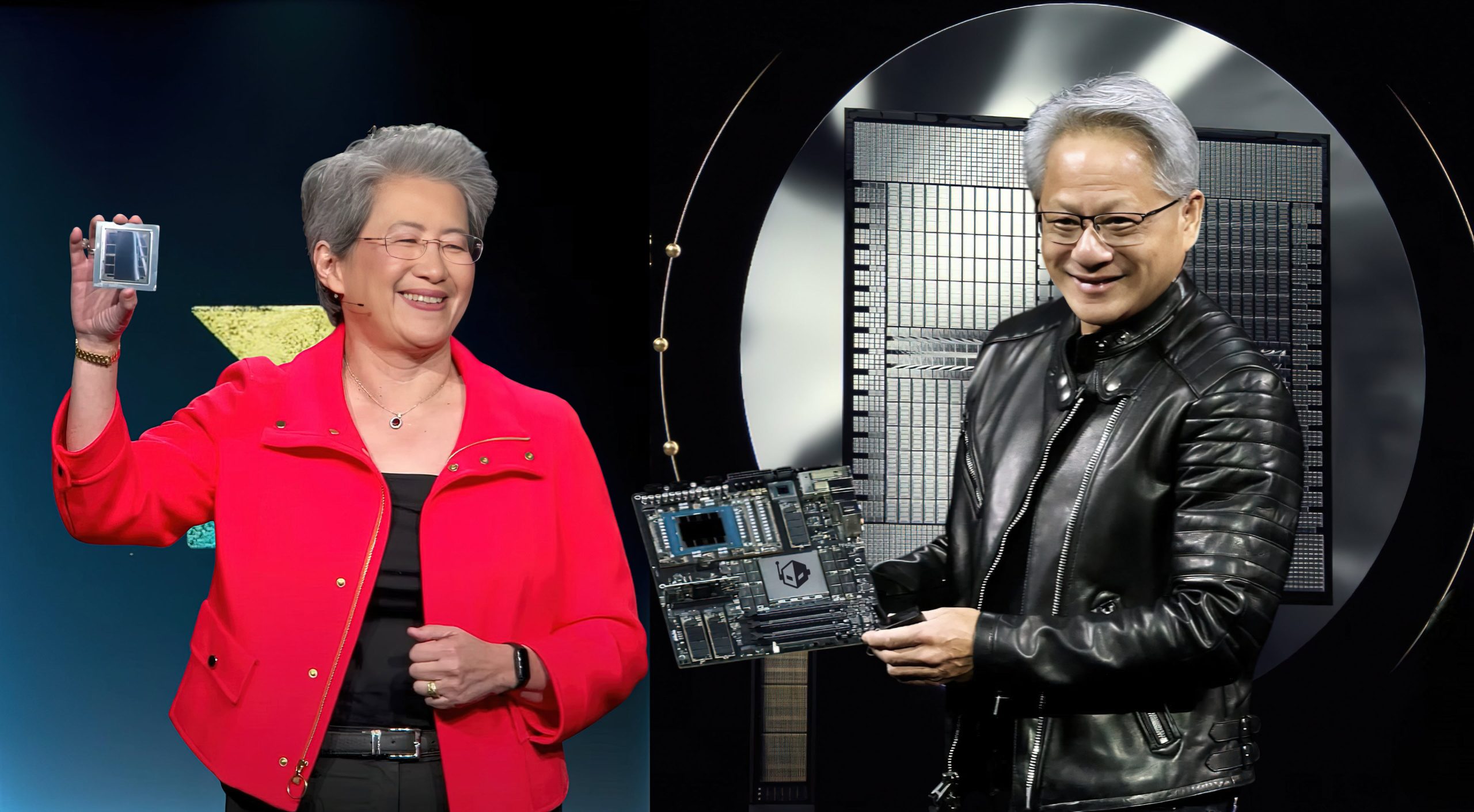

The latest MLPerf v5.1 AI Inference benchmarks have arrived, and they’ve brought some fireworks to the world of high-performance computing. The spotlight this time falls squarely on NVIDIA’s new Blackwell Ultra GB300 GPU and AMD’s Instinct MI355X accelerator – two chips that represent the best of what each company has to offer in large-scale AI workloads.

While Intel also had its Arc Pro B60 in the mix, the real duel in this round was between NVIDIA and AMD, both pushing the limits of performance and efficiency.

NVIDIA’s Blackwell Ultra GB300 makes an immediate impression with sheer brute force. In the DeepSeek R1 (Offline) test, a 72-GPU GB300 setup posted a remarkable 420,569 samples per second, compared to 289,712 on the previous-generation GB200. That’s a 45% uplift, nearly matching NVIDIA’s claim of a 50% generational improvement. Even on smaller 8-GPU clusters, the GB300 delivered a similar 44% advantage. In practical terms, this means the same number of GPUs can push through substantially more batch inference work per second, taking throughput to new highs.
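The 45% figure can be checked directly from the two throughput numbers quoted above. The snippet below is a minimal sketch of that arithmetic; the function name `uplift_percent` and the variable names are illustrative assumptions, not part of any MLPerf tooling.

```python
def uplift_percent(new: float, old: float) -> float:
    """Relative throughput gain of `new` over `old`, in percent."""
    return (new / old - 1.0) * 100.0


# DeepSeek R1 (Offline), 72-GPU results as quoted in the article.
gb300_72gpu = 420_569  # samples per second
gb200_72gpu = 289_712  # samples per second

gain = uplift_percent(gb300_72gpu, gb200_72gpu)
print(f"GB300 vs GB200 (72 GPUs): {gain:.1f}% uplift")
```

The same helper works for any pair of results in this round, as long as both numbers come from the same model, scenario, and GPU count.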

In the DeepSeek R1 (Server) scenario – where the challenge shifts to handling dynamic query loads – the GB300 maintained its lead over the GB200, with a 25% performance bump on the 72-GPU configuration and a 21% gain on the 8-GPU setup. These are not minor uplifts but significant leaps that allow enterprises running interactive AI workloads, from chatbots to large-scale recommendation engines, to serve more queries without expanding their datacenter footprint.

AMD’s Instinct MI355X, meanwhile, came to MLPerf with something to prove – and it delivered. On Llama 3.1 405B (Offline), an MI355X 8-GPU run clocked 2,109 tokens per second, a solid 27% gain over NVIDIA’s GB200 in the same class. The result signals that AMD’s Instinct line isn’t just competitive – it’s aggressively pushing into workloads where NVIDIA has traditionally held dominance.

Perhaps most impressive was the Instinct MI355X’s showing in Llama 2 70B (Offline). With 64 accelerators, AMD’s system churned out 648,248 tokens per second, dropping to 350,820 at 32 chips and 93,045 at just 8 GPUs. To put that into perspective, the NVIDIA GB200 delivered 65,770 tokens per second at 8 GPUs, meaning AMD’s platform outpaced it by roughly 41% in that slice. These results suggest that the MI355X is particularly well optimized for generative transformer workloads, making it a strong candidate for companies building advanced language models.
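The Llama 2 70B figures also allow two quick sanity checks: the MI355X-to-GB200 ratio at 8 GPUs, and how well MI355X throughput holds up per GPU as the cluster grows. The sketch below uses only the numbers quoted above; the helper `scaling_efficiency` and the variable names are hypothetical, and the comparison assumes the submissions are otherwise comparable, which the article does not confirm.

```python
# Llama 2 70B (Offline) tokens per second, keyed by GPU count, as quoted above.
mi355x_tokens_per_s = {8: 93_045, 32: 350_820, 64: 648_248}
gb200_8gpu_tokens_per_s = 65_770


def scaling_efficiency(results: dict[int, float], base_gpus: int) -> dict[int, float]:
    """Per-GPU throughput at each size, relative to the per-GPU throughput at `base_gpus`."""
    base_per_gpu = results[base_gpus] / base_gpus
    return {n: (total / n) / base_per_gpu for n, total in results.items()}


# How many times faster the 8-GPU MI355X run is than the 8-GPU GB200 run.
ratio_8gpu = mi355x_tokens_per_s[8] / gb200_8gpu_tokens_per_s

# 1.0 would mean perfectly linear scaling from the 8-GPU baseline.
efficiency = scaling_efficiency(mi355x_tokens_per_s, base_gpus=8)

print(f"MI355X / GB200 at 8 GPUs: {ratio_8gpu:.2f}x")
for gpus, eff in sorted(efficiency.items()):
    print(f"{gpus:>2} GPUs: {eff:.0%} of linear scaling")
```

Normalizing to the 8-GPU run is a design choice made here for illustration; any other baseline in the table would work equally well.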

Intel’s Arc Pro B60 also deserves a mention. Though its raw numbers were modest (around 3,009 tokens per second on Llama 2 70B), the card isn’t targeting hyperscale AI in the same way as NVIDIA and AMD. Instead, its appeal lies in cost efficiency and flexibility for smaller deployments, giving organizations an entry point into accelerated inference without datacenter-scale investments.

NVIDIA isn’t stopping at raw throughput either. The company highlighted how its Blackwell Ultra GPUs set reasoning records in MLPerf, with a 4.7x lead in offline mode and 5.2x lead in server tests compared to the Hopper generation. This positions GB300 as not only a faster chip but also a more capable one when tackling increasingly complex reasoning tasks in AI models. That kind of capability resonates with enterprises experimenting with advanced multi-modal systems where logic and inference matter as much as speed.

Looking ahead, the benchmark war is far from settled. Both NVIDIA and AMD are expected to fine-tune software stacks, optimize kernels, and leverage architectural updates in the next rounds of MLPerf. Intel, too, could surprise with further refinements in its Arc series. For end users, the message is clear: AI acceleration is evolving at a breakneck pace, and choosing between platforms may come down less to raw numbers and more to workload compatibility, software ecosystem, and total cost of ownership. One thing is certain: the bar for AI inference has been raised dramatically.

3 comments

datacenter bills gonna cry with these monsters

waiting for mlperf v6.0 to see the rematch

intel arc b60 feels like budget gpu but nice for small labs