AMD has fired a serious shot in the AI hardware race with its Instinct MI350 series, revealed in detail at Hot Chips 2025.

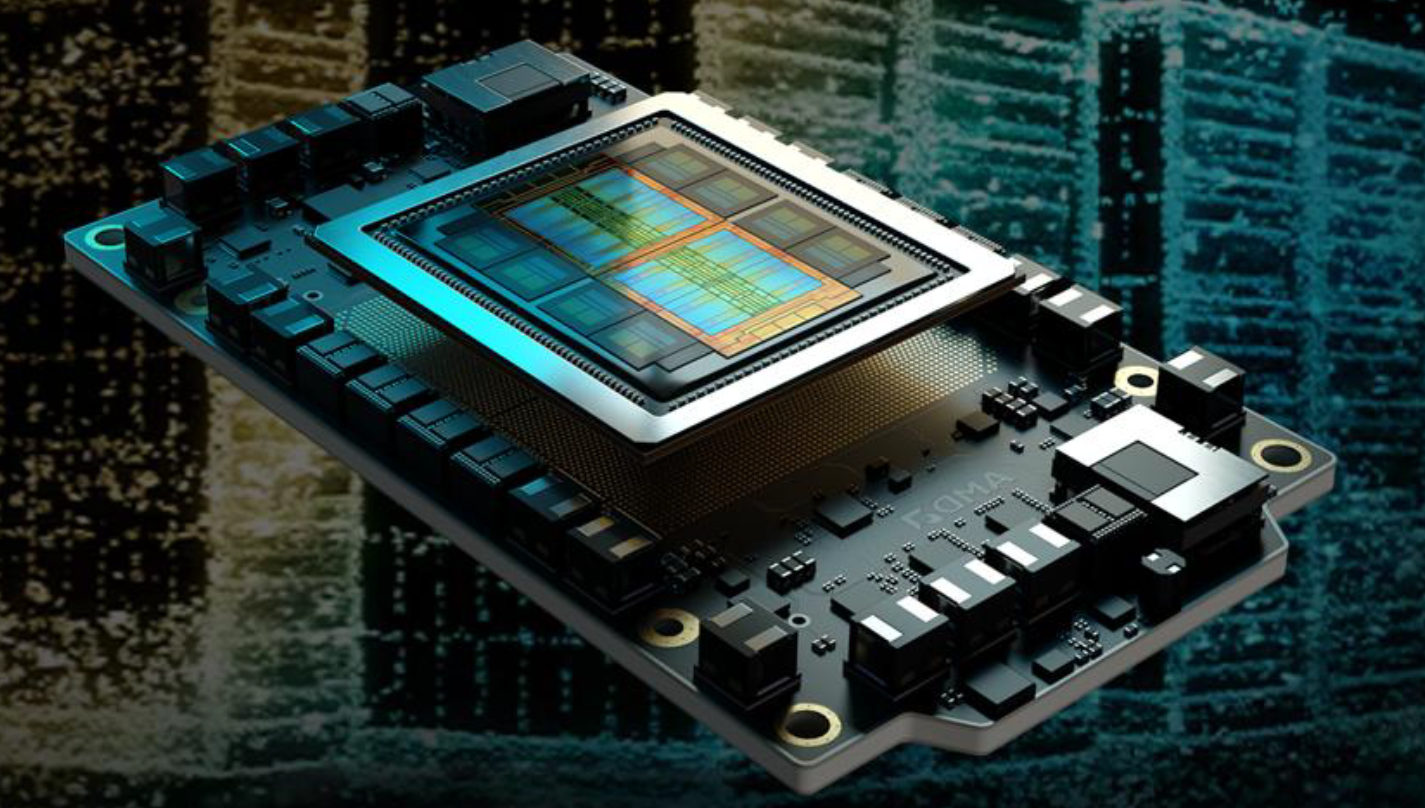

Built on the CDNA 4 architecture and a 3nm + 6nm 3D chiplet design, the MI350 family is designed to crush large-scale AI workloads and compete head-to-head with NVIDIA’s most advanced accelerators.

The MI350 lineup exists because large language models (LLMs) keep ballooning in size. AMD’s answer was simple: new low-precision data formats and a massive memory expansion. The result? Accelerators that AMD says can hold models of up to 520B parameters on a single GPU, thanks to 288GB of HBM3e memory and up to 8 TB/s of bandwidth.
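To see why smaller data formats matter as much as raw capacity, here is a back-of-the-envelope sketch in Python. It assumes 1 GB = 10^9 bytes and counts model weights only (no KV cache, activations, or runtime overhead), so the results are upper bounds rather than deployable sizes. The function name `max_params_billions` is made up for this illustration.

```python
# Weights-only upper bound on model size for a given memory capacity.
# Assumptions (not from AMD): 1 GB = 1e9 bytes, no KV cache or activations.

HBM_CAPACITY_GB = 288  # per-accelerator HBM3e capacity quoted in the article

BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP6": 6, "FP4": 4}


def max_params_billions(capacity_gb: float, bits: int) -> float:
    """Return the largest parameter count (in billions) whose weights fit."""
    bytes_per_param = bits / 8
    # GB divided by bytes-per-parameter yields billions of parameters.
    return capacity_gb / bytes_per_param


for name, bits in BITS_PER_PARAM.items():
    limit = max_params_billions(HBM_CAPACITY_GB, bits)
    print(f"{name}: up to ~{limit:.0f}B parameters (weights only)")
```

Halving the bits per parameter doubles the ceiling: the same 288GB that tops out at 144B parameters in FP16 stretches to 576B in FP4, before any working memory is set aside.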

Two main models anchor the series. The MI350X is the 1000W air-cooled option, clocked at up to 2.2 GHz. For datacenters chasing peak performance, the MI355X raises the stakes with liquid cooling, a 1400W TBP, and clocks up to 2.4 GHz. Both pack a staggering 185 billion transistors, spread across eight Accelerator Complex Dies (XCDs) on TSMC’s 3nm process and paired with two 6nm I/O dies.

Memory performance is equally brutal: eight stacks of HBM3e deliver 288GB at up to 8 TB/s, supported by AMD’s Infinity Fabric with 5.5 TB/s of bisection bandwidth and a 256MB Infinity Cache. Compute numbers are nothing short of wild: the MI355X delivers up to roughly 10 PFLOPs in FP8 and 20 PFLOPs in FP4/FP6 per accelerator (the 80.5 PFLOPs FP8 figure applies to a full eight-GPU platform), representing a 4x generational leap. In inference workloads, AMD claims a jaw-dropping 35x gain on Llama 3.1 405B compared to the MI300 series.
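Memory bandwidth matters because single-stream token generation is often limited by how fast the weights can be read, not by peak FLOPs. The sketch below is a rough roofline-style estimate, not an AMD benchmark: it assumes every generated token requires reading all weights from HBM once, ignores KV-cache traffic and batching, and uses the hypothetical helper name `decode_tokens_per_second`.

```python
# Rough bandwidth-bound ceiling for single-stream decoding.
# Assumptions (illustrative only): each token reads every weight once from
# HBM, no batching, no KV-cache traffic, peak bandwidth fully achieved.


def decode_tokens_per_second(bandwidth_tb_s: float,
                             params_billions: float,
                             bits_per_param: int) -> float:
    """Return an upper bound on tokens/s when weight reads dominate."""
    weight_bytes = params_billions * 1e9 * bits_per_param / 8
    bandwidth_bytes_s = bandwidth_tb_s * 1e12
    return bandwidth_bytes_s / weight_bytes


# Llama 3.1 405B stored at 4 bits per weight occupies about 202.5 GB,
# which fits in 288 GB; 8 TB/s is the peak bandwidth quoted above.
ceiling = decode_tokens_per_second(8.0, 405, 4)
print(f"Bandwidth-bound ceiling: ~{ceiling:.1f} tokens/s per stream")
```

Under these assumptions the ceiling is about 39.5 tokens per second for one stream; batching many requests together is what lets real systems approach the compute limits instead.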

Flexibility is another key strength. AMD allows GPU and memory partitioning, enabling up to eight 70B model instances on a single accelerator. The chips scale to eight accelerators per UBB board, tied together with high-speed Infinity Fabric links. System builds pair them with 5th Gen EPYC CPUs and Pensando NICs for full datacenter deployment.
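A quick sanity check shows how eight 70B instances could plausibly share 288GB. The sketch assumes memory is split evenly across partitions and counts weights only; the article does not say which data format AMD has in mind, so the FP4 case here is a guess, and `partition_fit` is a hypothetical helper.

```python
# Does one model instance fit in one memory partition?
# Assumptions (not confirmed by the article): memory is divided evenly,
# 1 GB = 1e9 bytes, and only the weights are counted.


def partition_fit(total_gb: float, partitions: int,
                  params_billions: float, bits_per_param: int):
    """Return (fits, partition_gb, weights_gb) for one model instance."""
    partition_gb = total_gb / partitions
    weights_gb = params_billions * bits_per_param / 8
    return weights_gb <= partition_gb, partition_gb, weights_gb


for bits in (8, 4):
    fits, part_gb, w_gb = partition_fit(288, 8, 70, bits)
    verdict = "fits" if fits else "does not fit"
    print(f"{bits}-bit weights: {w_gb:.0f} GB vs {part_gb:.0f} GB partition, {verdict}")
```

With 4-bit weights a 70B model needs about 35GB against a 36GB partition, which leaves very little headroom for KV cache, so real deployments would likely need short contexts or a different split.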

How does it stack up? Against NVIDIA’s GB200, AMD claims 2x the FP64 performance, 1.6x the memory capacity, and rough parity in FP8, with FP6 and FP4 support new to this generation of Instinct parts. Compared to its own MI300X, AMD’s claimed 4x compute uplift and 35x inference gain make the MI355X a generational leap that’s hard to ignore.

Availability is slated for Q3 2025 through AMD’s ecosystem partners, with the next-gen MI400 already teased for 2026. For now, though, the MI350 is AMD’s statement piece: a heavyweight AI accelerator designed not just for benchmarks, but for the swelling reality of trillion-parameter models.
