NVIDIA’s proposed mega-deal with OpenAI has been framed as the ultimate symbol of the artificial intelligence boom: a potential partnership worth up to $100 billion that would see a vast 10-gigawatt build-out of next-generation “Vera Rubin” compute systems. On paper, it looks like the kind of once-in-a-generation agreement that could lock in demand for NVIDIA’s chips and cement its status as the backbone of the global AI infrastructure.

Yet buried in NVIDIA’s latest 10-Q filing is a reminder that has made investors and industry watchers pause: none of this is guaranteed.

The company openly cautions that negotiations with OpenAI, as well as with other major partners such as Intel and Anthropic, may never result in definitive, binding contracts. Even if they do, the eventual scope, timing, and economics of those deals could end up very different from what is currently being discussed behind closed doors and celebrated in headlines.

Legal teams include that kind of language in almost every large corporate filing, but the scale of this particular opportunity makes the disclaimer far more striking. A 10-gigawatt AI build-out is not just another cluster in a rented hall; it would draw as much electricity as several large power plants can generate, and it would require billions of dollars in GPUs, networking fabric, cooling, land, and construction. For skeptics who already see NVIDIA as the poster child of an overheated AI trade, the idea that such a colossal plan still rests on “maybe” money reinforces their view that the sector is flirting with bubble territory. In online discussions, some retail investors complain that the company is chasing “AI castles in the sky” and accuse it of letting OpenAI hold all the cards.

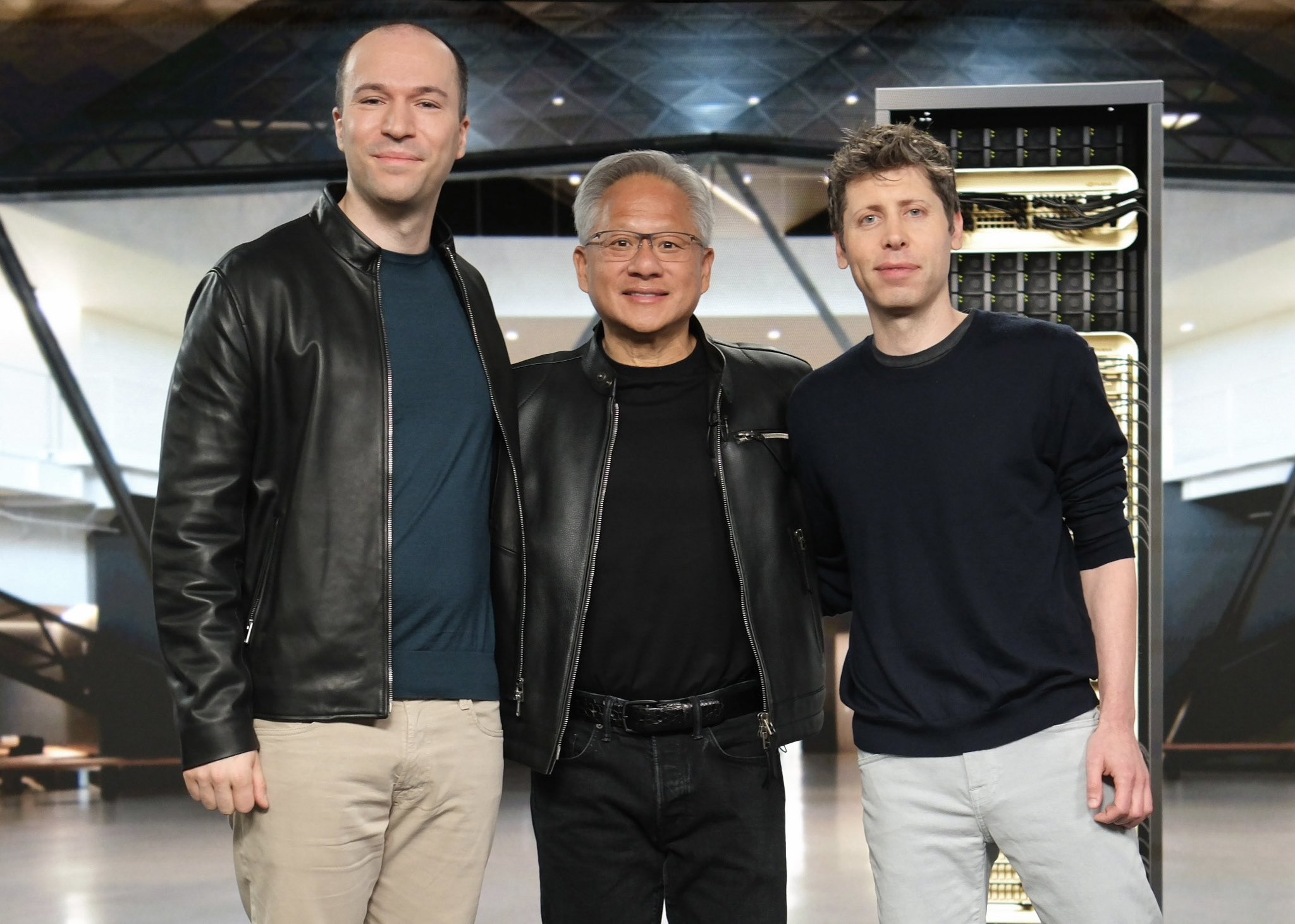

NVIDIA’s top brass knows those doubts are out there. Speaking to Bloomberg after the company’s latest earnings, CEO Jensen Huang pushed back against the idea that NVIDIA is blindly riding hype. He described both NVIDIA and OpenAI as being deliberately cautious, trying to align any massive infrastructure build with concrete visibility into long-term demand and OpenAI’s actual financing capacity. Ambition is not the problem, he implied; the real discipline lies in refusing to pour concrete and install racks until the economics, funding, and customer pipeline truly line up.

That approach is essential because AI infrastructure has become one of the most capital-intensive gambles in modern technology. OpenAI’s trajectory illustrates both the promise and the uncertainty. The company has amassed an enormous user base for its AI services, claiming hundreds of millions of weekly users and enjoying what NVIDIA characterizes as healthy gross margins. But converting that popularity into stable, predictable, multi-year revenue streams is a very different challenge. Subscriptions can be downgraded, pilots can stall, enterprise budgets can be squeezed, and regulators can suddenly reshape entire business models. NVIDIA, as the supplier of the expensive hardware at the foundation of all this, has to plan not just for today’s exploding demand, but also for the very real possibility that growth slows or reverses.

Beyond the financial unknowns, there is the physical reality of building AI at planetary scale. A 10-gigawatt footprint implies staggering power consumption, extensive grid upgrades, and heavy cooling loads that often rely on scarce fresh water. Even AI enthusiasts are beginning to worry that the current pace of data-center construction could strain already fragile power systems in key regions. Some of the loudest voices in comment threads point out that while tech giants dream of ever-larger “AI campuses,” ordinary households in some countries still struggle with basic grid reliability. If energy prices spike, or communities push back against water-hungry facilities, projects of the size NVIDIA and OpenAI are sketching out could be delayed, downsized, or forced to relocate.

Environmental and social constraints are only one part of the risk. Another is macroeconomic: valuations across the AI ecosystem have surged to levels that remind many observers of previous bubbles. Critics argue that today’s expectations for AI-driven productivity and profit are being priced in long before real-world efficiency gains have been fully proven. In the more pessimistic corners of the market, commentators predict that an AI downturn could trigger the next major financial shock, especially if indebted companies and over-leveraged investors discover that the cash flows behind all this silicon fall short of the story that was sold to them.

Against that backdrop, NVIDIA is trying to walk a fine line. On one side, it continues to design the most coveted GPUs in the world and to pitch gigantic clusters based on its upcoming Vera Rubin systems as the natural foundation for the next wave of generative AI. Securing OpenAI as a flagship customer for that platform would send a powerful signal to cloud providers, enterprises, and governments that NVIDIA’s ecosystem remains the default choice for serious AI work. On the other side, its cautious wording in the 10-Q is a reminder that not every eye-catching “opportunity” will show up later as recognized revenue.

The reference to OpenAI, Intel, and Anthropic in that filing is not so much a red alert as a reality check. Deals can shrink or be staggered over longer timelines. Partners can change strategic direction, pursue custom silicon, or face their own funding challenges. Governments can roll out new rules around data, energy, or competition that reshape the economics of AI build-outs overnight. By spelling out those uncertainties, NVIDIA is effectively telling investors that it will not commit tens of billions of dollars in capacity without a clear path to sustainable returns, no matter how euphoric the market becomes.

Ultimately, the story here is bigger than whether one $100 billion agreement is signed in its original form. The deeper question is whether the world can sustainably build, power, cool, and pay for the AI infrastructure implied by such numbers. NVIDIA’s restrained language suggests an uncomfortable but important truth: the limiting factor for the next phase of AI may not be algorithms or enthusiasm, but capital, electricity, water, and regulatory patience. For investors trying to gauge how long the AI boom can run, and for policymakers wrestling with its environmental and economic costs, that may be the most important message hidden in the fine print.
