NVIDIA is quietly rewriting the rules of the memory market. After years of building AI accelerators around traditional DDR-based platforms, the company is now preparing to load its next wave of data center hardware with LPDDR, the low-power DRAM you normally find in smartphones and ultra-thin laptops. On paper it looks like a smart engineering choice for massive AI clusters.

In practice, it risks blowing up the already fragile supply of consumer memory and pushing prices for PC and mobile buyers into territory that once sounded like a bad meme.

For months, DRAM manufacturers have warned that we are entering an era of shortage. Demand from AI data centers has grown faster than capacity expansions can keep up, and what originally looked like a temporary squeeze has turned into a structural imbalance. New fabs take years, not quarters, to come online, and in the meantime hyperscalers and AI vendors are signing every long-term supply agreement they can get. NVIDIA pivoting toward LPDDR effectively means one of the hungriest chip companies on the planet is jumping into the same memory pool as smartphone giants.

Analysts at Counterpoint describe this as a seismic shift for the supply chain, and the wording is not exaggerated. By treating LPDDR as primary fuel for AI servers, NVIDIA suddenly looks less like a niche high-end GPU buyer and more like a top-tier phone maker in terms of volume. The problem is that the LPDDR ecosystem was never designed to absorb both markets at full throttle. These parts were tuned for hundreds of millions of handsets, tablets and lightweight PCs, not for racks of AI accelerators that chew through terabytes of memory per node and keep doing it 24 hours a day.

Price forecasts already reflect that stress. Industry estimates suggest DRAM could jump as much as 50 percent over the next few quarters, on top of an earlier 50 percent year-over-year rise. In plain language, two successive 50 percent increases compound to roughly 2.25 times the starting price, so many buyers may effectively see memory prices more than double within a relatively short window. Suddenly those jokes about a 32 GB DDR5 kit costing as much as a mid-range GPU do not sound quite so ridiculous. Some enthusiasts are openly bragging about hoarding five or six DDR5 kits in a drawer, half mocking, half convinced they have accidentally made the smartest investment of their PC-building career.
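Successive percentage rises multiply rather than add, so the combined effect is easy to check. The snippet below is a minimal sketch, assuming both increases reach the full 50 percent (the second figure is only an upper estimate); the function name `compound_increase` is illustrative and not taken from any forecast model.

```python
def compound_increase(*pct_rises: float) -> float:
    """Return the overall price multiplier after successive percentage rises."""
    multiplier = 1.0
    for pct in pct_rises:
        # Each rise applies to the already-increased price, not the original one.
        multiplier *= 1.0 + pct / 100.0
    return multiplier


# Assumption: an earlier 50 percent rise followed by a further 50 percent rise.
overall = compound_increase(50, 50)
print(f"Overall multiplier: {overall:.2f}x")  # prints "Overall multiplier: 2.25x"
```

If the second rise lands below the 50 percent ceiling, the multiplier shrinks accordingly; passing a smaller second argument shows how sensitive the "prices double" headline is to that forecast.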

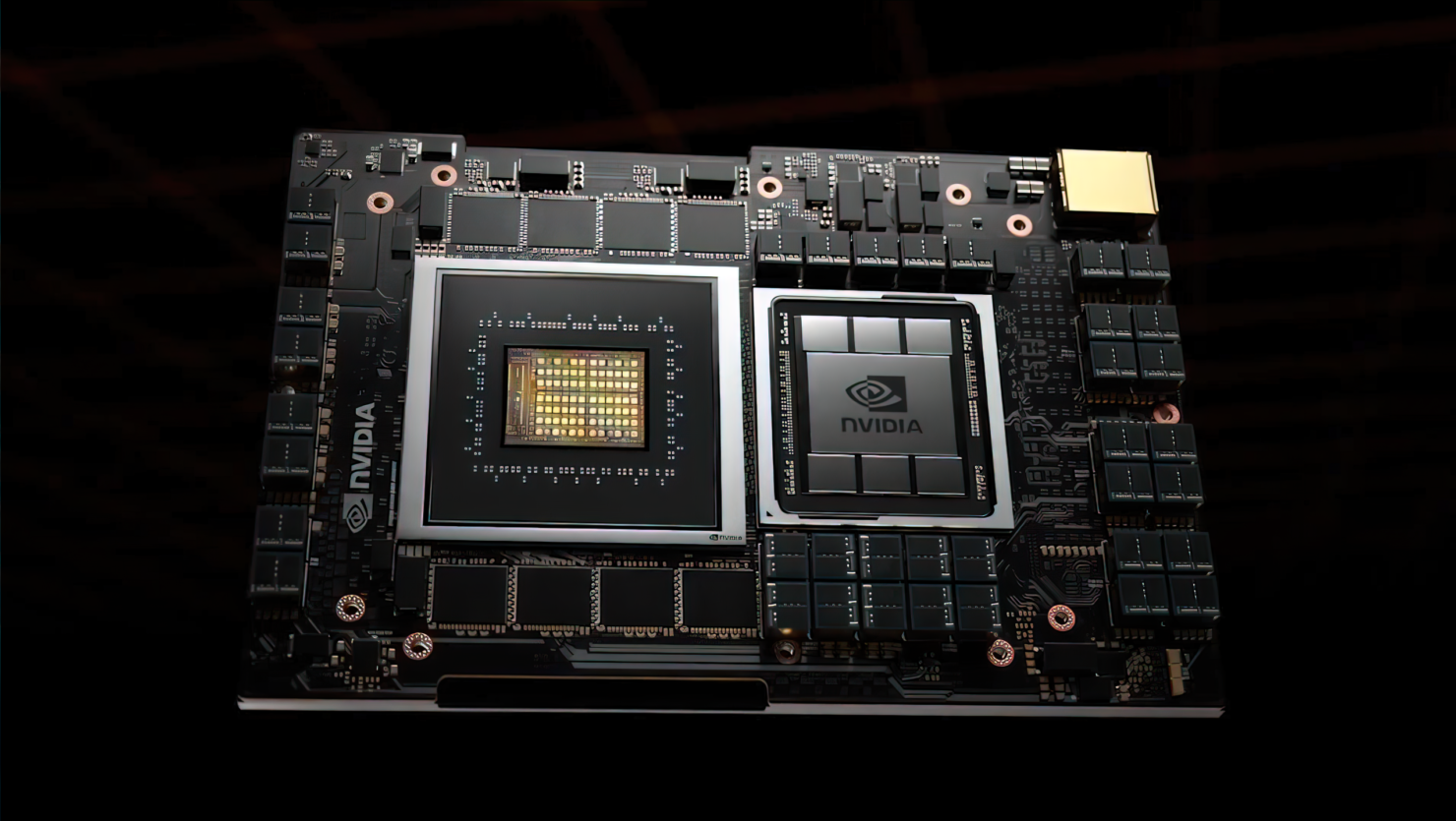

From NVIDIA's perspective, the move to LPDDR is easy to justify. Low-power DRAM offers significantly better energy efficiency per bit and can be paired with robust error correction schemes, which is critical when you are training large language models across tens of thousands of GPUs. Every watt matters in a hyperscale data center; shaving power from memory can translate into millions of dollars saved in electricity and cooling over the lifetime of a cluster. If you are building the ultimate AI box, you pick the memory technology that delivers the most performance per watt, even if that means collateral damage in consumer markets.
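To see how a few watts per node can add up to that kind of money, here is a rough back-of-the-envelope sketch. Every input is a hypothetical placeholder rather than a figure from NVIDIA or any operator, and the `pue` factor (power usage effectiveness) is used as a simple stand-in for cooling and facility overhead.

```python
HOURS_PER_YEAR = 24 * 365


def lifetime_energy_savings(
    watts_saved_per_node: float,
    nodes: int,
    years: float,
    usd_per_kwh: float,
    pue: float = 1.0,
) -> float:
    """Estimate dollars saved from lower memory power over a cluster's lifetime.

    Assumes nodes run around the clock and that facility overhead scales
    linearly with IT power via the PUE multiplier.
    """
    kw_saved = watts_saved_per_node / 1000 * nodes
    kwh_saved = kw_saved * HOURS_PER_YEAR * years * pue
    return kwh_saved * usd_per_kwh


# Hypothetical inputs, chosen only to illustrate the scale of the calculation.
savings = lifetime_energy_savings(
    watts_saved_per_node=100,  # assumed memory power reduction per node
    nodes=10_000,              # assumed cluster size
    years=5,                   # assumed service life
    usd_per_kwh=0.10,          # assumed electricity price
    pue=1.3,                   # assumed facility overhead
)
print(f"Estimated lifetime savings: ${savings:,.0f}")
```

The linear model ignores utilization swings and tiered power pricing, so treat the result as an order-of-magnitude estimate rather than a budget line.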

Consumers, unfortunately, sit on the other end of that equation. LPDDR5 and its successors are already embedded in most flagship phones, gaming handhelds and ultra-portable laptops, and high-speed DDR5 dominates new desktop platforms. When one buyer starts siphoning off an outsized share of the wafers used for advanced DRAM, everything from HBM stacks for GPUs to LPDDR, GDDR and vanilla DDR modules ends up competing for the same limited capacity. Even registered DIMMs for servers are not magically immune. The result is a supply chain that stays highly constrained for months, if not years, regardless of whether you are trying to build an RTX-powered workstation or just upgrade a budget gaming rig.

There is still plenty of speculation about how this plays out. Some observers argue that if NVIDIA leans heavily into LPDDR, demand for traditional DDR5 in AI servers will fall, leaving more modules for consumer desktops and gaming laptops. Others counter that the company will simply take whatever it can get, bouncing between LPDDR and DDR5 depending on what is available, and hoovering up everything high-speed in the process. In more pessimistic corners of the community, people joke that we may end up back on old DDR4 platforms simply because new memory is either sold out or priced into absurdity.

There is also a political angle. When one vendor's appetite for AI infrastructure starts warping global supply chains, talk of regulatory scrutiny is never far behind. In comment threads you already see frustrated users suggesting that governments may eventually be forced to step in if a single company can distort prices for such a foundational component. Others take a calmer view, arguing that the current frenzy has a whiff of artificial scarcity and will fade as new fabs ramp and AI demand normalizes sometime around 2026. Until then, the uncomfortable truth is that AI workloads currently need more high-end memory than the industry can manufacture.

For everyday buyers, the best strategy depends on timing. If you are sitting on an older platform and planning a full upgrade this year, it may be safer to lock in RAM prices sooner rather than later, before another round of contract hikes trickles down to retail shelves. That is exactly why threads are full of half-serious comments telling people to buy it now or to stop being poor if they skipped cheap DDR5 during the last dip. On the other hand, if your system is already running 32 GB or more and you are not chasing record-breaking benchmarks, you can probably ride out the turbulence and wait for the next price cycle.

What is clear is that NVIDIA's shift toward LPDDR in AI servers is much more than an obscure design tweak. It is a catalyst amplifying deep structural changes in the memory industry, accelerating the push toward higher-density, more efficient DRAM while exposing how fragile the supply chain still is. Shortages have a way of spurring innovation as engineers search for smarter architectures and more efficient manufacturing, but they also leave a trail of frustrated PC builders and smartphone buyers along the way. Until supply catches up, expect DRAM to be the surprising bottleneck that shapes not only the future of AI, but also the pace at which everyday computing can afford to move forward.

2 comments

Imagine walking into a store and seeing 32 GB DDR5 priced like a used GPU, my wallet started crying before my eyes did

I am just gonna ride DDR4 into the sunset, yall can fight over LPDDR5 and HBM scraps in 2025 😂