AMD Instinct MI400 and MI500 roadmap challenging NVIDIA’s AI GPU dominance

In just a few years, AI GPUs have gone from niche accelerator cards to the most fought-over silicon on the planet. NVIDIA still owns most of that market with Hopper, Blackwell and the upcoming Vera Rubin platforms, but AMD has stopped playing catch-up and is now laying out an aggressive, year-by-year counterattack. At its 2025 Financial Analyst Day, the company finally detailed how the Instinct MI400 family, landing in 2026, and the MI500 generation, lined up for 2027, are supposed to turn team red into a real alternative for hyperscalers, cloud providers and governments that do not want to bet their entire future on a single vendor.

Instead of sporadic big-bang launches, AMD is promising a predictable annual cadence for its data-center GPUs, mirroring the rhythm NVIDIA has used so effectively. The current CDNA 3- and CDNA 4-based Instinct parts such as MI300X, MI325X and MI350X have already pushed AMD into serious consideration for training large language models, recommendation engines and traditional high-performance computing. Now MI400 on CDNA 5 and MI500 on a next-generation CDNA Next/UDNA architecture are framed as the next two rungs on the ladder, each step bringing more compute, more memory and more bandwidth to keep feeding the ferocious appetite of modern AI models.

Instinct MI400: CDNA 5, HBM4 and a focus on rack-scale AI

The Instinct MI400 series is AMD’s headline act for 2026. Built on the CDNA 5 architecture, MI400 is not just a small refresh over MI350; AMD is promising roughly double the compute capability of its predecessor. The flagship configuration is rated at around 40 petaFLOPs of FP4 throughput and 20 petaFLOPs at FP8, targeting both ultra-dense inference and large-scale training. In practice that means MI400 should be able to run cutting-edge language and vision models at lower precision, provided the quantization scheme keeps accuracy acceptable, allowing cloud operators to squeeze more tokens, images and video frames out of every watt and every rack unit.

Raw compute is only half the story. Where MI400 becomes genuinely interesting is the memory subsystem. AMD is moving the family to HBM4, bumping capacity from 288 GB of HBM3e on MI350 to a massive 432 GB per GPU. That 50 percent uplift is paired with an advertised 19.6 TB/s of memory bandwidth, more than twice what MI350 offered. For modern generative AI models that can easily exceed a trillion parameters in large deployments, memory capacity determines how much can be kept on-device, while bandwidth controls how often those parameters can actually be touched. MI400’s combination of capacity and speed is clearly targeted at customers who are currently running into out-of-memory crashes or destructive model sharding on older accelerators.
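To make the capacity and bandwidth figures above more concrete, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers quoted in this article (288 GB, 432 GB, 19.6 TB/s); the bytes-per-weight table, the decimal units (1 GB = 1e9 bytes) and the helper names are illustrative assumptions, and the estimate counts weights only, ignoring KV caches, activations and quantization metadata.

```python
import math

# Figures quoted in the article for the MI400 flagship.
HBM_CAPACITY_GB = 432.0      # HBM4 capacity per GPU
HBM_BANDWIDTH_TBS = 19.6     # advertised memory bandwidth per GPU
PREV_CAPACITY_GB = 288.0     # HBM3e capacity on MI350

# Assumption: raw storage per weight, ignoring scaling factors and metadata.
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}


def capacity_uplift(new_gb, old_gb):
    """Fractional capacity increase, e.g. 0.5 for a 50 percent uplift."""
    return new_gb / old_gb - 1.0


def max_params_billions(capacity_gb, bytes_per_param):
    """Billions of weights that fit if memory held nothing but weights."""
    return capacity_gb / bytes_per_param


def min_gpus_for_weights(params_billions, bytes_per_param, capacity_gb):
    """Smallest GPU count whose combined HBM can hold the weights alone."""
    return math.ceil(params_billions * bytes_per_param / capacity_gb)


def full_sweep_ms(capacity_gb, bandwidth_tbs):
    """Milliseconds to read every byte of HBM once at the advertised rate."""
    return capacity_gb / (bandwidth_tbs * 1000.0) * 1000.0


if __name__ == "__main__":
    uplift = capacity_uplift(HBM_CAPACITY_GB, PREV_CAPACITY_GB)
    print(f"capacity uplift: {uplift:.0%}")
    for fmt, nbytes in BYTES_PER_PARAM.items():
        fits = max_params_billions(HBM_CAPACITY_GB, nbytes)
        gpus = min_gpus_for_weights(1000.0, nbytes, HBM_CAPACITY_GB)
        print(f"{fmt}: ~{fits:.0f}B weights per GPU, >= {gpus} GPUs for 1T parameters")
    sweep = full_sweep_ms(HBM_CAPACITY_GB, HBM_BANDWIDTH_TBS)
    print(f"full HBM sweep: {sweep:.1f} ms")
```

Under these assumptions a single 432 GB GPU holds roughly 864 billion FP4 weights, a trillion-parameter model still needs at least three GPUs at FP8, and reading the whole memory once takes about 22 ms. Real deployments will land below these ceilings once caches and activations take their share.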

Bandwidth does not stop at the package boundary either. AMD is putting a lot of emphasis on scale-out performance, quoting around 300 GB/s of interconnect bandwidth per GPU for multi-accelerator configurations. Crucially, the company is leaning into standards-based rack-scale networking with technologies such as UALink (UAL), UALink over Ethernet (UALoE) and Ultra Ethernet (UEC) rather than forcing a completely proprietary fabric. The subtext is clear: AMD wants to be seen as the vendor that can plug into existing data-center designs instead of requiring an entire new, vendor-locked ecosystem to build what marketing people now love to call AI factories.
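A quick sketch shows why the gap between on-package and off-package bandwidth matters so much for sharded models. It compares the quoted 300 GB/s interconnect figure with the quoted 19.6 TB/s of HBM4 bandwidth; the function names are hypothetical, decimal units are assumed, and the result is an idealized peak-rate estimate that ignores latency, protocol overhead and network topology.

```python
# Figures quoted in the article, expressed in GB and GB/s (decimal units).
HBM_CAPACITY_GB = 432.0
HBM_BANDWIDTH_GBS = 19_600.0   # 19.6 TB/s of on-package memory bandwidth
SCALE_OUT_GBS = 300.0          # quoted interconnect bandwidth per GPU


def transfer_seconds(size_gb, bandwidth_gbs):
    """Idealized time to move size_gb at a sustained peak rate."""
    return size_gb / bandwidth_gbs


def bandwidth_gap(local_gbs, remote_gbs):
    """How many times faster local memory is than the interconnect."""
    return local_gbs / remote_gbs


if __name__ == "__main__":
    local = transfer_seconds(HBM_CAPACITY_GB, HBM_BANDWIDTH_GBS)
    remote = transfer_seconds(HBM_CAPACITY_GB, SCALE_OUT_GBS)
    gap = bandwidth_gap(HBM_BANDWIDTH_GBS, SCALE_OUT_GBS)
    print(f"read 432 GB from local HBM:   {local * 1000:.0f} ms")
    print(f"send 432 GB over scale-out:   {remote:.2f} s")
    print(f"local memory is ~{gap:.0f}x faster than the interconnect")
```

With these inputs, local memory comes out roughly 65 times faster than the scale-out link, so data that has to cross GPUs is far more expensive to touch than data that stays on-device. That is the practical argument for fitting as much of a model as possible into each accelerator's own HBM.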

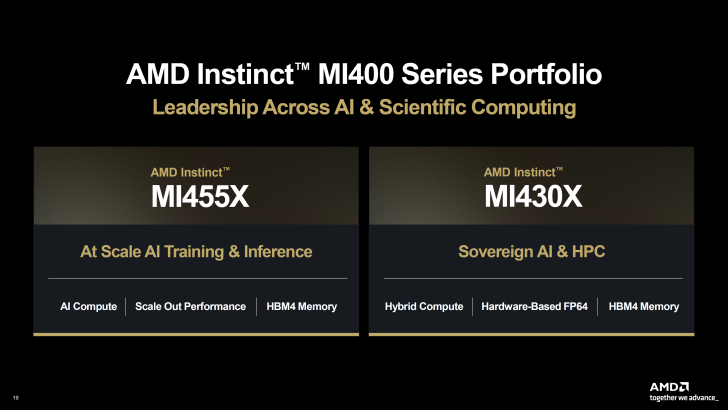

MI455X and MI430X: two flavors of MI400 for different buyers

Within the MI400 family AMD is carving out two distinct products. The Instinct MI455X is the top-end configuration aimed squarely at large-scale AI training and high-throughput inference. This is the card hyperscalers will be eyeing when they want to pack as many large language models as possible into a single rack while still leaving room for future model growth. Paired with 432 GB of HBM4 and the full CDNA 5 feature set, MI455X is clearly designed to sit in the same conversation as NVIDIA’s Vera Rubin accelerators when customers are drawing up next-generation cluster designs.

The Instinct MI430X, by contrast, is tuned for classic HPC and so-called sovereign AI workloads. It retains the same HBM4 memory footprint as MI455X but leans harder into FP64 performance and tight CPU plus GPU integration. Governments, research labs and regulated industries that want local AI capacity under their own legal and physical control are exactly the buyers AMD is courting here. The company is positioning MI430X as a building block for supercomputers where climate models, physics simulations and national-scale language models share the same underlying hardware, with EPYC CPUs and CDNA 5 GPUs working in lockstep.

Taking on NVIDIA’s Vera Rubin: more than a FLOP fight

On paper, AMD is clearly confident about where MI400 lands relative to NVIDIA’s Vera Rubin platform. Company material highlights around 1.5 times the memory capacity of competing accelerators, broadly similar memory bandwidth, parity in FP4 and FP8 throughput, comparable scale-up bandwidth inside a node and roughly 1.5 times the scale-out bandwidth between GPUs. Those numbers, if they hold up in shipping silicon, would erase many of the obvious spec-sheet excuses customers currently have for staying entirely in the green camp.
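For readers who want to see what those ratios imply, the short sketch below simply divides AMD's quoted MI400 figures by the claimed advantage. The outputs are baselines implied by AMD's own comparison as reported here, not confirmed NVIDIA specifications, and the helper name `implied_baseline` is purely illustrative.

```python
# MI400 figures quoted earlier in the article, paired with the advantage
# AMD claims over competing accelerators (1.0 would mean parity).
CLAIMS = {
    "memory capacity (GB)": (432.0, 1.5),
    "scale-out bandwidth (GB/s)": (300.0, 1.5),
    "FP4 throughput (PFLOPs)": (40.0, 1.0),
    "FP8 throughput (PFLOPs)": (20.0, 1.0),
}


def implied_baseline(amd_value, claimed_ratio):
    """Competitor figure implied by an 'N times better' marketing claim."""
    return amd_value / claimed_ratio


if __name__ == "__main__":
    for metric, (amd_value, ratio) in CLAIMS.items():
        baseline = implied_baseline(amd_value, ratio)
        print(f"{metric}: AMD {amd_value:g}, implied competitor {baseline:g}")
```

Read this way, the 1.5 times capacity claim implies a competitor at about 288 GB per GPU, and the 1.5 times scale-out claim implies about 200 GB/s. Both are inferences from the ratios rather than published numbers.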

But veterans of the AI hardware space know that spec sheets do not train models; full systems and software stacks do. That is where NVIDIA has been running laps around everyone, not just with GPUs but with networking, reference system designs and an end-to-end software platform. A common criticism from engineers is that the contest is not about having the single fastest GPU but about proving you can scale to AI factories with thousands of accelerators working together. Jensen Huang’s company has already done that repeatedly. AMD is now trying to answer that challenge, arguing that MI400 plus HBM4 plus standard interconnects and a rapidly improving ROCm software stack can provide similar scale without locking customers into a single vendor’s ecosystem.

MI500 in 2027: the next leap after MI400

If MI400 is AMD’s bid to be taken seriously next year, then the Instinct MI500 family is the bigger swing that could redefine the conversation in 2027. Official details are still light, but the company is already talking about a next-generation CDNA Next or UDNA architecture, a new process node and major jumps in compute density, memory technology and interconnect bandwidth. The MI500 line is meant to power fully next-gen AI racks rather than simply dropping into existing designs, with packaging and system-level integration pushing further than what MI400 can reasonably deliver.

Fans are already speculating that MI500, and potential intermediate parts like a hypothetical MI450X, could leverage more advanced process nodes than NVIDIA’s 3 nm designs, giving AMD a transistor-density and efficiency edge if manufacturing lines up. That is admittedly early-days optimism, but it shows how quickly sentiment has shifted. A few years ago the idea of AMD leading in any high-end AI metric would have sounded like wishful thinking. Now, with MI350 already on the market and MI400 and MI500 publicly road-mapped, NVIDIA’s seemingly unassailable lead looks at least challengeable.

What about gamers watching all this AI madness?

While data-center investors and AI researchers cheer every new Instinct slide, a very different crowd is watching with mixed feelings: PC gamers. Many of them see AMD chief executive Lisa Su pushing ever harder into AI and worry there is nothing left in the tank for Radeon gaming cards. From their point of view, wafers and engineering effort that could have gone into new desktop GPUs are now being swallowed by MI400 and MI500 accelerators for cloud giants and national labs.

The reality is more nuanced. There is no question that right now AI accelerators are where the big margins are, and both AMD and NVIDIA are prioritizing those parts accordingly. But the technologies being developed for Instinct products do not live in a vacuum. HBM packaging, power delivery tricks, new cache hierarchies and advanced interconnects are all innovations that can later trickle down into gaming and workstation GPUs. AMD has already hinted at future gaming architectures coinciding with its broader Zen and GPU roadmaps around 2027, so while gamers may feel ignored in the short term, they are unlikely to be abandoned entirely. In the meantime the AI boom is simply paying the bills.

Is this an AI bubble or the new normal?

Inevitably, any discussion of multi-petaFLOP accelerators and multi-billion-dollar data-center budgets runs into the same question: is this an unsustainable AI bubble? Some of the loudest voices who spent the last two years mocking the hype are now tracking every new GPU leak and roadmap slide. Even among fans there is a running joke that if a bubble does pop, it might hit NVIDIA hardest because the company has become so tightly associated with the AI trade, while AMD still has a more diversified portfolio across consoles, PCs, embedded and server CPUs.

Whatever your view, AMD clearly does not believe the demand is a temporary blip. You do not commit to a yearly accelerator cadence, multi-generation roadmaps and massive investments in HBM4 and next-gen interconnects if you think the market is about to vanish. What does seem inevitable is that customers will demand more choice and less dependency on a single vendor with near-monopoly pricing power. That is exactly the gap MI400 and MI500 are designed to fill, even if the road to meaningful market share will be long and sometimes brutal.

Bottom line: slides today, real competition tomorrow

Right now MI400 and MI500 live mostly as roadmaps, performance targets and carefully chosen bar charts. The hard work begins when real silicon lands in labs and data centers and has to prove itself against NVIDIA’s mature hardware and software ecosystem. AMD must show that Instinct accelerators can deliver not just attractive peak FLOPs but stable, scalable clusters, robust software support and predictable supply at the kind of scale hyperscalers now require.

If the company can hit those marks, the payoff is huge. Memory-rich accelerators like MI455X and MI430X would give customers genuine architectural choice instead of defaulting to whatever NVIDIA ships next. MI500 could then push that competition further, potentially shaking up pricing, contract terms and the balance of power in high-end AI. In other words, the real story of MI400 and MI500 is not just about one vendor catching another on a spec sheet. It is about whether the AI era becomes a one-company show or a competitive market where innovation is driven by multiple players fighting for every rack. AMD has finally stepped into the ring; the next two years will show whether it can land more than a few good jabs.
