Intel is finally making a decisive move to regain ground in the rapidly evolving AI hardware race. After years of strategic uncertainty and trailing its rivals, the company has announced a bold new plan: an annual AI product cadence that will see a new architecture released every twelve months. The announcement, made during Intel’s Tech Tour, signals a turning point for the chipmaker as it attempts to reposition itself at the center of an AI market dominated by NVIDIA and AMD.

For years, Intel’s AI roadmap has been something of a puzzle – ambitious in words, but often short on execution.

With markets booming for inference chips and cloud-scale accelerators, the company’s scattered approach left it struggling to keep up with competitors who’ve already built extensive ecosystems. Now, Intel seems ready to act decisively. Chief Technology Officer Sachin Katti took the stage to share what could become the company’s most important architectural shift in recent memory.

According to Katti, Intel’s immediate focus is an inference-optimized GPU designed to deliver high performance for enterprise AI workloads. The product is expected to be formally unveiled later this year at the Open Compute Project (OCP) summit. Early hints suggest that this GPU will feature enhanced memory bandwidth, expanded capacity, and scalability suited to both data centers and cloud-based AI applications. Most notably, Katti emphasized that while the hardware will evolve from one generation to the next, the software abstraction layer will remain consistent, so developers can move smoothly between generations – a vital factor in fostering ecosystem stability.

Intel’s upcoming AI architecture, codenamed Jaguar Shores (JGS), will serve as the backbone of this strategy. The company had originally planned to lead with its Falcon Shores lineup, but those plans were scrapped to consolidate efforts around JGS. Insiders describe Jaguar Shores as a massive, multi-domain solution integrating HBM4 memory and a range of IPs at rack scale – an indication that Intel is targeting cloud hyperscalers and large enterprise clients rather than just niche AI enthusiasts.

While details remain under wraps, the new inference-optimized chip is believed to bridge Intel’s consumer GPU technology and its AI-focused lineup. Speculation points toward the Battlemage architecture paired with high-capacity GDDR7 memory, an approach that could allow Intel to balance cost, efficiency, and performance for inference workloads. Industry analysts note that this is a crucial segment: real-world AI deployments rely heavily on inference acceleration, not just training performance.

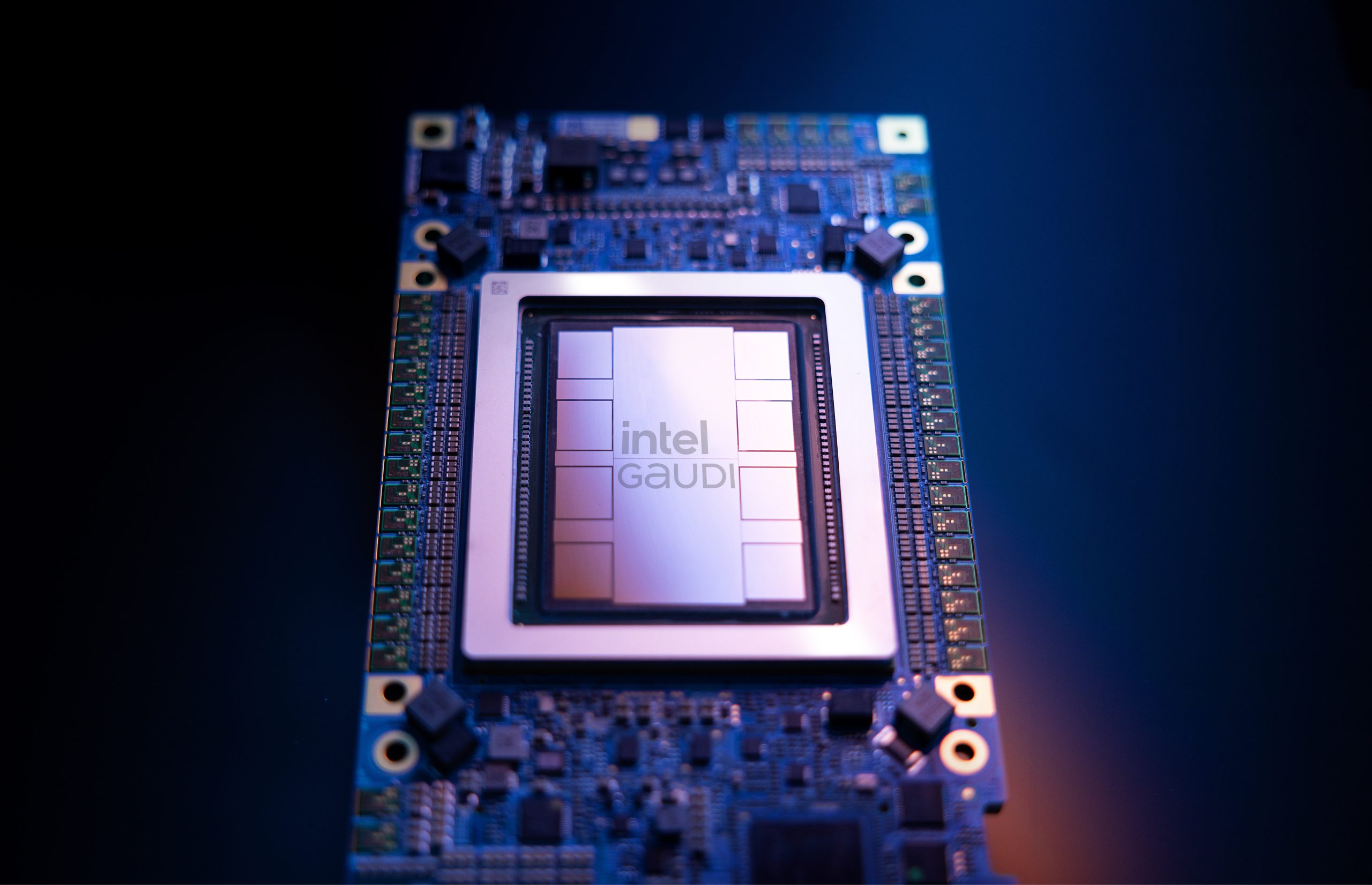

Despite the enthusiasm, Intel faces an uphill climb. NVIDIA and AMD are comfortably ahead – not just technologically, but in market adoption and developer trust. Intel’s Gaudi AI platform introduced promising hardware, but limited ecosystem support and sparse third-party adoption left it overshadowed. To succeed, Intel’s new cadence must deliver not just on specs but also on availability, software maturity, and long-term reliability.

Still, the tone at the event was one of renewed confidence. Intel executives spoke with conviction about bringing consistency back to product releases and ensuring customers can plan around predictable upgrade cycles. For an industry weary of delays and cancellations, that alone could rebuild trust. If Intel truly executes this new vision, 2025 might mark the start of its long-awaited comeback in AI computing – not through grandiose promises, but through the discipline of delivering, year after year.