Samsung has unveiled TRUEBench, a proprietary AI performance benchmark designed to address long-standing gaps in existing industry tools. The company has been adding AI features to its smartphones for years, expanding its offerings every six months. As that ecosystem matured, however, Samsung found that the tools available to measure AI progress were often limited in scope and practicality.

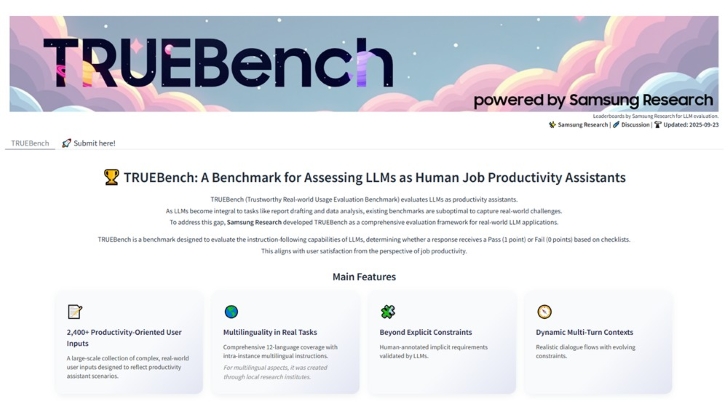

With TRUEBench, Samsung aims to provide an assessment framework that mirrors how people and businesses actually use AI in daily productivity tasks.

Why Samsung built its own benchmark

Most AI benchmarks today still focus narrowly on English-language performance and simple question-answer sessions. While these are useful, they fail to reflect the complex, multilingual, and multi-step workflows that AI now powers in workplaces and on consumer devices. Samsung Research decided to take matters into its own hands, building TRUEBench (Trustworthy Real-world Usage Evaluation Benchmark) to capture the reality of AI usage more accurately. The result is a benchmark that is not just technical, but practical, testing models in scenarios users are most likely to encounter.

A broad, real-world approach

TRUEBench comprises 2,485 test sets spread across 10 categories, 46 sub-categories, and 12 languages. The test sets range in complexity from short inputs of just a few characters to tasks requiring analysis of more than 20,000 characters, such as long-document summarization. The benchmark covers ten core enterprise-focused AI tasks, including content generation, translation, data analysis, and text summarization. With this breadth, Samsung aims to make the tool versatile enough for both consumer-facing apps and business-critical AI solutions.

The company also highlights that TRUEBench places heavy emphasis on multilingualism, a feature often overlooked by conventional benchmarks. Given the global nature of Samsung’s user base, this multilingual support ensures that AI models are judged not only on English proficiency but also on their ability to handle diverse linguistic contexts.

How scoring and evaluation work

One of TRUEBench’s standout features is its AI-powered automatic evaluation system. Samsung says the system, designed through collaboration between human experts and AI, provides consistent and trustworthy scoring. Beyond grading performance, the benchmark also delivers a structured leaderboard where developers, researchers, and companies can compare results transparently. Samsung hosts the data samples and leaderboards on Hugging Face, making them accessible to the global AI community and allowing developers to compare up to five AI models side by side.
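To make the side-by-side comparison concrete, here is a minimal Python sketch of how per-category pass rates for several models might be tabulated. It is an illustration only, not Samsung's implementation: the record format `(model, category, passed)`, the function names `pass_rates` and `compare`, and the sample data are all assumptions. The only detail taken from the article is the limit of five models per comparison.

```python
from collections import defaultdict

MAX_MODELS = 5  # the article says up to five models can be compared side by side


def pass_rates(results):
    """Return {model: {category: pass_rate}} from (model, category, passed) records."""
    totals = defaultdict(lambda: defaultdict(int))
    passes = defaultdict(lambda: defaultdict(int))
    for model, category, passed in results:
        totals[model][category] += 1
        if passed:
            passes[model][category] += 1
    return {
        model: {cat: passes[model][cat] / n for cat, n in cats.items()}
        for model, cats in totals.items()
    }


def compare(results, models):
    """Build a side-by-side table: one row per category, one column per model."""
    if len(models) > MAX_MODELS:
        raise ValueError(f"compare at most {MAX_MODELS} models at a time")
    rates = pass_rates(results)
    categories = sorted({cat for m in models for cat in rates.get(m, {})})
    # None marks a category for which a model has no recorded results.
    return {cat: [rates.get(m, {}).get(cat) for m in models] for cat in categories}


# Made-up records for illustration only; not real TRUEBench data.
sample = [
    ("model-a", "translation", True),
    ("model-a", "translation", False),
    ("model-a", "summarization", True),
    ("model-b", "translation", True),
    ("model-b", "summarization", False),
]
table = compare(sample, ["model-a", "model-b"])
for category, row in table.items():
    print(category, row)
```

Each row lists one category's pass rate per model, in the order the models were requested. How TRUEBench actually weights categories or aggregates scores is not described in the article, so the sketch reports raw per-category rates and nothing more.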

Samsung’s vision for AI leadership

Paul (Kyungwhoon) Cheun, CTO of Samsung’s DX Division and head of Samsung Research, described TRUEBench as more than just an internal tool. He emphasized that Samsung intends it to become a standard for evaluating AI productivity. According to Cheun, Samsung’s expertise in real-world AI applications gives it a unique edge in defining performance metrics that matter. By positioning TRUEBench as both rigorous and widely available, Samsung hopes to not only showcase its own leadership but also influence industry standards for AI evaluation.

As AI continues to shape smartphones, smart devices, and enterprise systems, benchmarks like TRUEBench may become essential for helping users and businesses understand which models are truly effective in real-world conditions. Rather than relying on narrow or artificial tests, Samsung’s initiative reflects a growing demand for transparency and trustworthiness in how AI performance is measured.
