Arm Lumex CSS: A turnkey blueprint for customizable 3nm chipsets

Arm is changing how mobile silicon gets built with Lumex, a compute subsystem (CSS) designed as a production-ready blueprint for 3nm process nodes at multiple foundries. Rather than shipping chips, Arm is packaging proven implementations of the messy, time-consuming parts of a modern system-on-chip and letting partners focus on what matters most: the CPU and GPU clusters, performance tuning, and product-specific differentiation. The promise is simple but ambitious – faster time to market, fewer integration risks, and tangible gains in performance and efficiency for everything from flagship phones to tiny wearables.

Historically, companies like MediaTek and Samsung licensed Arm CPU and GPU IP blocks and then stitched together their own SoCs. That approach remains, but Lumex CSS turns the process into a turnkey flow. Partners can pick from Arm’s latest CPU and GPU families, drop them into a pre-validated subsystem, and scale the design to fit their performance, area, and power envelope – without reinventing the glue logic or chasing corner-case bugs across memory, interconnect, and cache.

What Lumex CSS actually is (and what it isn’t)

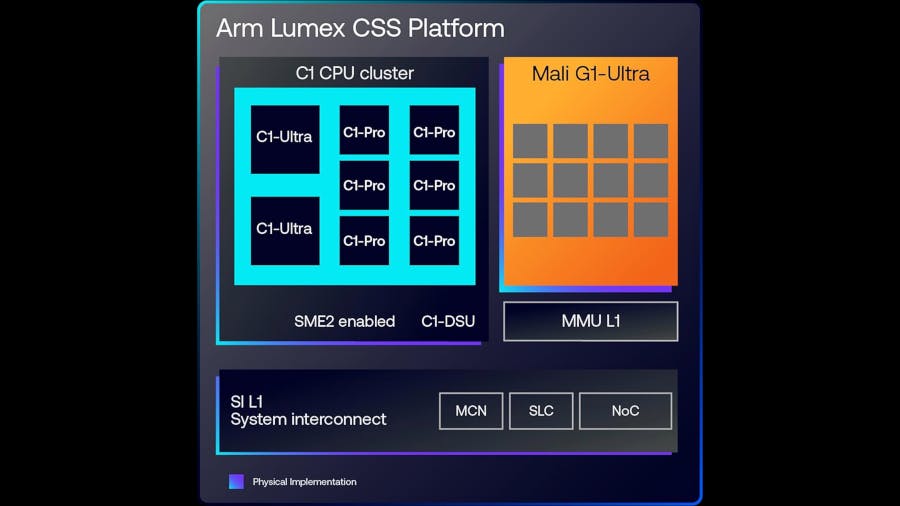

Arm isn’t entering the merchant silicon business. Instead, it is providing production-ready implementations that map to 3nm processes from multiple foundries. In Arm’s framing, its silicon and OEM partners can treat Lumex as a set of flexible building blocks. The knobs and dials are plentiful: CPU cluster composition, GPU shader counts, system-level cache sizing, and security/virtualization features – all within a cohesive architecture that’s already been floor-planned and power-optimized.

The CPU menu: C1 family for every class of device

The new C1 core family underpins Lumex and spans four distinct profiles, each aimed at a different slice of the market:

- C1-Ultra – Built for peak performance. Arm cites roughly +25% single-thread performance and double-digit year-on-year IPC gains, ideal for large-model inference, computational photography, content creation, and on-device generative AI.

- C1-Premium – Delivers near-Ultra performance but with better silicon density: about 35% smaller area than C1-Ultra, targeting upper-mainstream phones and heavy multitasking.

- C1-Pro – Tuned for sustained efficiency with around +16% sustained performance, a sweet spot for long video playback and continuous streaming inference.

- C1-Nano – The extreme efficiency option, delivering about +26% efficiency in a tiny footprint for wearables and the smallest form factors.

Thanks to the new C1-DSU (DynamIQ Shared Unit), partners can build clusters from 1 to 14 CPU cores, mixing up to three core types per design. That means a flagship can combine several C1-Ultra cores for bursty responsiveness, a pack of C1-Premium for throughput, and a few C1-Pro or C1-Nano cores for background tasks that sip power.
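
The cluster rules described above (1 to 14 cores, at most three core types per design) can be sketched as a small configuration check. This is a minimal illustration only: the `validate_cluster` function and its dictionary format are hypothetical and are not part of any Arm tool, and the limits simply restate the figures quoted in this article.

```python
from typing import Mapping

# Core names and limits restate the article's figures; nothing here is an Arm API.
CORE_TYPES = ("C1-Ultra", "C1-Premium", "C1-Pro", "C1-Nano")
MAX_CORES = 14        # upper bound on cores per C1-DSU cluster, per the article
MAX_CORE_TYPES = 3    # at most three distinct core types per design


def validate_cluster(cores: Mapping[str, int]) -> int:
    """Return the total core count, or raise ValueError if a rule is broken."""
    unknown = set(cores) - set(CORE_TYPES)
    if unknown:
        raise ValueError(f"unknown core type(s): {sorted(unknown)}")
    if any(count < 0 for count in cores.values()):
        raise ValueError("core counts must be non-negative")

    # Only core types that are actually instantiated count toward the mix limit.
    used = {name: count for name, count in cores.items() if count > 0}
    total = sum(used.values())
    if not 1 <= total <= MAX_CORES:
        raise ValueError(f"cluster needs 1 to {MAX_CORES} cores, got {total}")
    if len(used) > MAX_CORE_TYPES:
        raise ValueError(f"at most {MAX_CORE_TYPES} core types, got {len(used)}")
    return total


# Hypothetical flagship mix: 2 Ultra + 6 Premium + 2 Pro.
flagship = {"C1-Ultra": 2, "C1-Premium": 6, "C1-Pro": 2}
print(validate_cluster(flagship), "cores")  # prints "10 cores"
```

A design that lists all four core types, or more than 14 cores in total, would be rejected by this check.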

Mali-G1 GPUs: scaling from lean to ludicrous

On the graphics side, the Mali-G1 family scales from a minimal single-shader configuration all the way to 24 shaders, enabling everything from budget devices to GPU-heavy premium phones and tablets. At the top end, the Mali-G1 Ultra delivers ~20% faster rasterization and 2× the ray-tracing performance of the previous-generation Immortalis-G925. That uplift lands right where users feel it most: smoother high-refresh gaming, faster rendering in creative apps, and more believable lighting in ray-traced titles.

The “secret sauce”: Interconnect and memory, re-engineered

Arm’s less glamorous but critical innovation sits in the on-chip fabric: a new System Interconnect L1 that hosts the SoC’s system-level cache (SLC). By redesigning the SLC, Arm claims about a 71% reduction in leakage versus standard RAM designs, which should mean lower idle power and longer standby life. Alongside it, the new MMU L1 enables secure, cost-efficient virtualization, opening the door to running multiple OS instances or sandboxed environments on the same device without blowing the power budget.
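
To put the 71% figure in perspective, here is a rough arithmetic sketch. The 10 mW baseline is purely hypothetical (the article gives no absolute numbers), and the calculation covers only the leakage of the cache RAM itself, not a device's total idle power.

```python
ARM_CLAIMED_REDUCTION = 0.71  # leakage reduction quoted in the article


def leakage_after(baseline_mw: float, reduction: float = ARM_CLAIMED_REDUCTION) -> float:
    """Leakage power remaining after applying a fractional reduction."""
    if baseline_mw < 0:
        raise ValueError("baseline leakage must be non-negative")
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be a fraction between 0 and 1")
    return baseline_mw * (1.0 - reduction)


# Hypothetical baseline: an SLC built from standard RAMs leaking 10 mW at idle.
baseline_mw = 10.0
print(f"{leakage_after(baseline_mw):.2f} mW")  # prints "2.90 mW"
```

In other words, under this assumption the redesigned SLC would leak a little under a third of what the baseline does; how much that extends standby time depends on everything else drawing power in the system.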

Real-world deltas: faster where it counts, thriftier everywhere else

Arm reports that a C1 CPU compute cluster delivers an average ~30% higher performance across six industry benchmarks versus previous designs. For popular, bursty workloads such as gaming and video streaming, Arm quotes ~15% higher performance. For daily-driver tasks such as video playback, web browsing, and social apps, the cluster is roughly 12% more efficient. Those figures will vary with OEM tuning, but they give a directional sense of what Lumex-based designs can achieve.

Zooming in on the absolute top end, C1-Ultra shows double-digit IPC gains against the already-fast Cortex-X925, while Mali-G1 Ultra widens the graphics lead in both classic and ray-traced pipelines. The net result: snappier interfaces, steadier frame times, and less thermal throttling under load.

SME2 and the on-device AI wave

At the heart of Arm’s AI push is the Scalable Matrix Extension 2 (SME2). With SME2, the new CPUs can be up to 5× faster for certain AI workloads and up to 3× more efficient than prior designs. Pair that with the Mali-G1 GPU’s ~20% inference uplift, and you have a platform built for on-device generative models, advanced camera pipelines, and private, low-latency assistants. Google’s Android team has highlighted that SME2-equipped hardware can run more capable models (think the likes of Gemma 3) directly on devices, making next-gen AI features broadly deployable without pinging the cloud for every request.
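
The "up to 5×" figure applies to the parts of a workload that SME2 actually accelerates. As a rough sketch, Amdahl's law estimates the end-to-end speedup when only a fraction of the runtime benefits. The fractions below are illustrative assumptions, not Arm measurements, and `overall_speedup` is a hypothetical helper.

```python
def overall_speedup(accelerated_fraction: float, kernel_speedup: float) -> float:
    """Amdahl's law: end-to-end speedup when only part of the runtime is accelerated."""
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    if kernel_speedup <= 0:
        raise ValueError("kernel_speedup must be positive")
    unaccelerated = 1.0 - accelerated_fraction
    return 1.0 / (unaccelerated + accelerated_fraction / kernel_speedup)


SME2_PEAK_SPEEDUP = 5.0  # "up to 5x" from the article; a best case, not a guarantee

# Illustrative fractions of runtime spent in matrix-heavy kernels (assumed values).
for fraction in (0.25, 0.5, 0.9):
    speedup = overall_speedup(fraction, SME2_PEAK_SPEEDUP)
    print(f"{fraction:.0%} accelerated -> {speedup:.2f}x overall")
```

Under these assumptions the end-to-end gain ranges from 1.25× to about 3.57×, which is why matrix-dominated workloads such as model inference stand to benefit most.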

What partners are saying

Major OEMs are already aligning. Samsung talks about using Arm’s compute subsystem to power its next wave of flagship mobile products, with a clear emphasis on pushing on-device AI further. Honor underscores how Lumex CSS helps bring premium-feeling performance and efficiency to upper-mid devices – where budget and thermals are tighter. And from Google’s perspective, SME2’s momentum means Android developers can ship richer AI experiences that run locally, cutting latency and improving privacy.

Why this matters to users

For everyday users, Lumex-based designs should translate into longer battery life in idle and mixed use, fewer slowdowns during app switching, faster photo and video processing, and gaming that holds its frame rate without cooking the palm of your hand. The granular CPU mix enabled by C1-DSU lets a phone wake instantly for a voice command on a tiny core, then surge to C1-Ultra for a burst of AI-driven photo edits, all while the interconnect and SLC keep data flowing efficiently.

Scaling beyond phones

Because C1-Nano emphasizes ultra-low power in a compact area, the same architecture scales into wearables and other small form factors. At the other end of the spectrum, higher-shader Mali-G1 configurations and larger CPU clusters can target tablets or lightweight laptops that want instant-on responsiveness with mobile-class battery life.

Bottom line

Lumex CSS isn’t a single chip; it’s a shortcut to better chips. By shipping a robust, pre-verified subsystem for 3nm nodes – and pairing it with a flexible menu of C1 CPUs and Mali-G1 GPUs – Arm is smoothing the path from whiteboard to wafer. The early numbers point to meaningful gains: double-digit CPU IPC improvements at the top, 2× ray tracing on the GPU, big strides in AI throughput with SME2, and deep idle-power cuts via a smarter interconnect and SLC. For partners, that’s less integration drag; for users, it’s faster, cooler devices that do more on-device. As AI continues to move from the cloud into our pockets and onto our wrists, Lumex looks like a timely – and very pragmatic – reset of the mobile SoC playbook.

3 comments

Virtualization on phones is wild… dual-boot Android weekend project incoming?

SME2 + Gemma 3 on-device is the real W. No cloud lag

C1-Nano wearables bout to last more than a day… hopefully 🤞