NVIDIA Pushes Back on Custom AI Chip Threat, Eyes Billions From China and Gigawatt-Scale Demand for Vera Rubin

NVIDIA’s financial chief, Colette Kress, took the stage at the Goldman Sachs Communacopia + Technology Conference with a clear message: the company is not worried about rivals chasing custom AI chips, and it expects staggering demand for its next-generation Vera Rubin processors. Her remarks also shed light on the complex geopolitical hurdles around selling its H20 AI GPUs in China, which could represent up to $5 billion in revenue if political tensions allow.

According to Kress, NVIDIA’s data center and networking business continues to surge even with China-specific H20 sales excluded from the figures. On that basis, the company posted 12% quarter-over-quarter growth in Q2 and is guiding for 17% sequential growth in Q3. Those numbers point to accelerating, not merely steady, adoption of NVIDIA’s AI infrastructure at scale.
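To put the two growth figures together, sequential rates compound rather than add. The short sketch below works through that arithmetic using only the 12% and 17% figures quoted above. It assumes Q3 lands exactly on guidance and that both rates are measured on the same ex-H20 basis; the function name is illustrative.

```python
def compound_growth(rates):
    """Return cumulative growth from a sequence of period-over-period rates."""
    total = 1.0
    for rate in rates:
        total *= 1.0 + rate
    return total - 1.0


q2_growth = 0.12    # reported quarter-over-quarter growth (from the article)
q3_guidance = 0.17  # guided sequential growth (assumes guidance is met exactly)

cumulative = compound_growth([q2_growth, q3_guidance])
print(f"Cumulative two-quarter growth: {cumulative:.1%}")  # 1.12 * 1.17 - 1 = 31.0%
```

In other words, if guidance holds, the ex-H20 business would end Q3 roughly 31% larger than it was two quarters earlier, slightly more than the 29% that simply adding the two rates would suggest.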

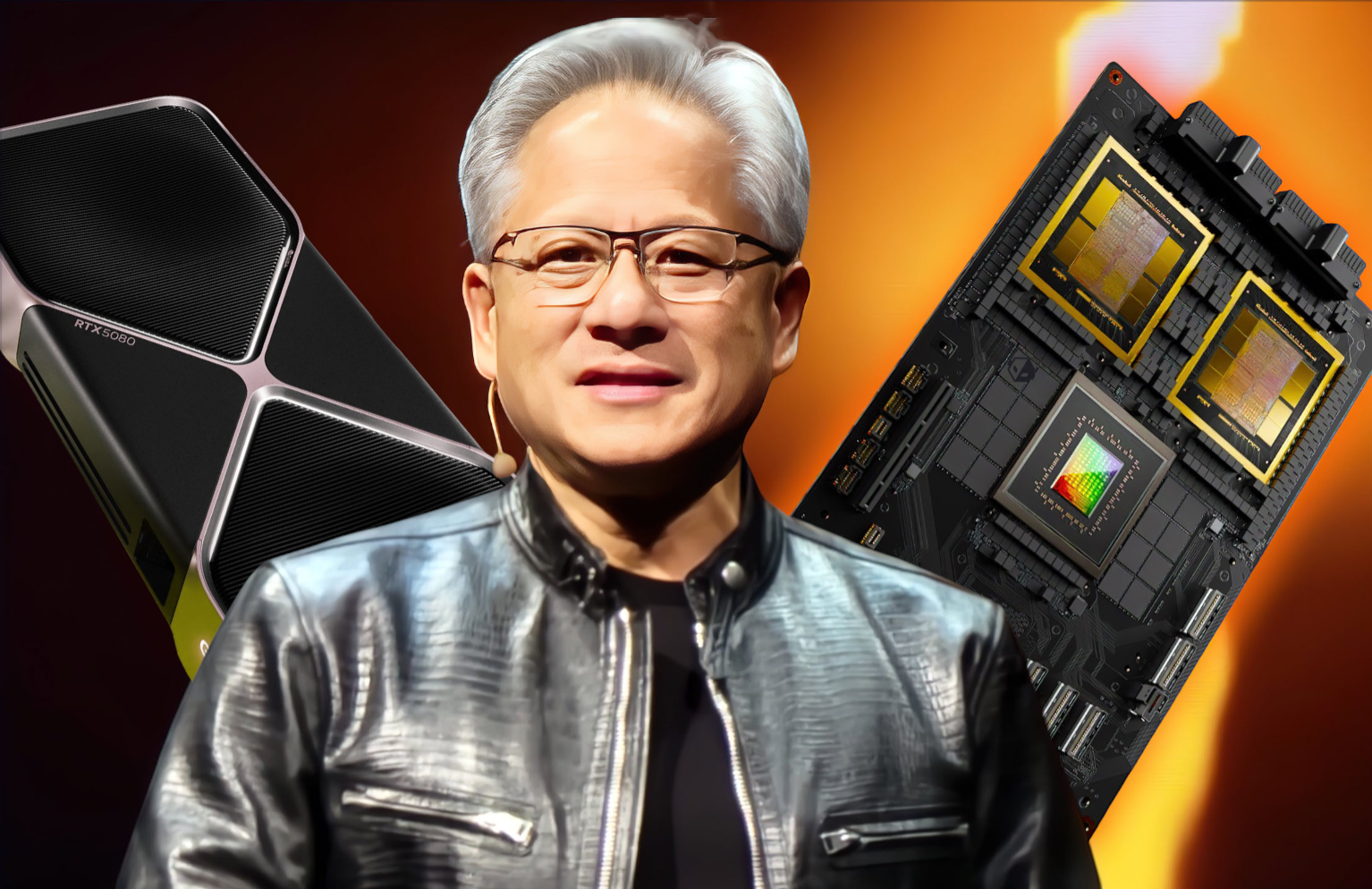

One of the surprises Kress emphasized was the rapid scaling of NVIDIA’s GB200 and GB300 racks. She noted that while industry chatter underestimated the impact of the Blackwell Ultra-based GB300, NVIDIA executed a seamless transition, achieving far higher scale and volume than many observers anticipated. In fact, market reports suggest that NVIDIA’s GB300 segment could post 300% sequential growth in Q3. The company now expects to keep ramping both GB200 and GB300 rack shipments in the months ahead.

The most politically sensitive topic, however, remains China. Kress confirmed that NVIDIA has secured licenses under the Trump administration’s framework to ship H20 GPUs to Chinese customers. Yet she admitted that sales depend on navigating an unstable geopolitical landscape. “There is still a little geopolitical situation that we need to work through between the two governments,” she said, adding that Chinese clients want reassurance that their government will endorse the purchases. If shipments proceed, revenue from H20 sales could add between $2 billion and $5 billion to NVIDIA’s books, a swing that underscores just how significant the China market remains.

At the same time, Beijing has been signaling that local firms should prioritize domestic chips over NVIDIA’s offerings. Despite this pressure, NVIDIA remains optimistic. Kress stressed that her company believes there is still a “strong possibility” of completing these shipments. That confidence, however, hasn’t been enough to shield NVIDIA’s stock, which recently slipped following Broadcom’s announcement of a $10 billion custom AI chip deal. The move reignited debate over whether hyperscalers will increasingly opt for cost-efficient custom silicon rather than NVIDIA’s standardized, but premium-priced, GPUs.

Kress dismissed the idea that custom AI chips represent an existential threat. She argued that the core of AI computing isn’t simply about cheap silicon but about sustainable power and performance. As she explained, inference-heavy reasoning models and agentic AI systems require immense throughput, and only data center–scale infrastructure can deliver the reliability and efficiency these workloads demand. “You have to remember,” she said, “owning these clusters is a four-to-six-year journey. You’re not just buying chips; you’re buying into continuous power draw, optimization, and long-term performance per watt and per dollar.”
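Kress’s “per watt and per dollar” framing can be made concrete with a rough lifetime-cost calculation. The sketch below is only an illustration: every number in it is a made-up placeholder rather than a figure from NVIDIA, Broadcom, or any real system, and the function and field names are hypothetical. It treats lifetime cost as purchase price plus electricity over the ownership period, divided by the work delivered.

```python
HOURS_PER_YEAR = 8760


def lifetime_cost_per_unit(capex_usd, power_kw, usd_per_kwh, years, throughput_per_year):
    """Purchase price plus energy cost, divided by total work delivered.

    All inputs are hypothetical; cooling, staffing, and financing are ignored.
    """
    energy_cost = power_kw * HOURS_PER_YEAR * years * usd_per_kwh
    total_cost = capex_usd + energy_cost
    return total_cost / (throughput_per_year * years)


# Placeholder systems, NOT real products: A is cheaper up front,
# B costs more but delivers more work for less power.
system_a = dict(capex_usd=2_000_000, power_kw=150, throughput_per_year=100)
system_b = dict(capex_usd=3_000_000, power_kw=120, throughput_per_year=200)

YEARS = 5           # midpoint of the four-to-six-year span Kress mentions
USD_PER_KWH = 0.08  # assumed electricity price

cost_a = lifetime_cost_per_unit(usd_per_kwh=USD_PER_KWH, years=YEARS, **system_a)
cost_b = lifetime_cost_per_unit(usd_per_kwh=USD_PER_KWH, years=YEARS, **system_b)
print(f"A: ${cost_a:,.2f} per unit of work, B: ${cost_b:,.2f} per unit of work")
```

With these placeholder inputs the pricier system comes out cheaper per unit of work, but that result follows entirely from the assumed numbers. Change the throughput or power figures and the ranking can flip, which is exactly why the comparison depends on measured performance rather than sticker price.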

This argument puts NVIDIA’s strategy in sharp relief: rather than chase down every niche custom contract, the company wants to dominate the market for turnkey, integrated racks that offer unmatched efficiency. By designing rack-scale solutions where all chips and networking components are optimized together, NVIDIA believes it can outpace bespoke competitors whose offerings may appear cheaper up front but struggle to deliver holistic efficiency over the lifetime of a cluster.

Attention then shifted to the Vera Rubin generation of AI GPUs. Kress revealed that all six Rubin chips have already been taped out, keeping the platform on schedule under NVIDIA’s one-year launch cadence. More striking was her claim that, even before launch, data center operators are penciling in “gigawatts” of demand. The figure reflects not only how central Rubin is expected to become in large-scale AI training and inference but also the growing recognition that compute power is becoming as strategic a resource as capital itself. “We’ve already had discussions where we probably will see several gigawatts needs for our Vera Rubin,” Kress explained, underscoring the enormous energy footprint that next-generation AI will require.

Rubin’s design will build on NVIDIA’s experience with GB300 rack deployments, integrating GPUs, networking, and software in a tightly optimized package. The company’s roadmap envisions delivering record-breaking performance while squeezing every possible advantage out of power efficiency. This is particularly critical as hyperscalers and enterprise AI developers confront the dual challenge of soaring compute needs and skyrocketing electricity costs. With power grids under strain and governments scrutinizing energy consumption, NVIDIA wants to position Rubin as the gold standard in performance per watt.

The wider industry context only makes NVIDIA’s posture more significant. While Broadcom’s massive custom chip contract raised eyebrows, NVIDIA is betting that customers won’t abandon its ecosystem when their future AI success depends on scaling workloads safely and efficiently. In this sense, Rubin represents not just another product cycle but a statement that NVIDIA intends to define the shape of AI infrastructure for years to come.

Kress’s comments highlight a company balancing immediate political risk with long-term technological dominance. On the one hand, billions in revenue hinge on whether Washington and Beijing allow H20 shipments to proceed. On the other, Vera Rubin has already captured interest so intense that its energy requirements are being planned years in advance. Between these poles, NVIDIA is navigating investor skepticism, competitive jabs, and global supply chain politics with the confidence of a company that knows its technology is still the benchmark by which all others are measured.

For all the uncertainty, one theme was consistent in Kress’s remarks: NVIDIA is not in retreat. It is doubling down on scale, efficiency, and innovation. Whether through billions in potential China revenue, rack-scale dominance, or gigawatt-level demand for Rubin, NVIDIA’s strategy is to make itself indispensable in an AI economy where performance and power are the ultimate currencies.

2 comments

bro even if it’s 5B from China, all politics could flip in a day… risky bet

tbh Broadcom custom chips might steal some of that thunder, but NVIDIA still king