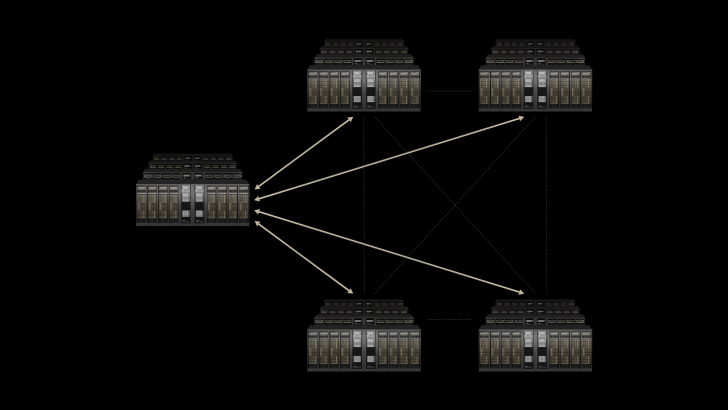

NVIDIA is rewriting the rules of AI infrastructure with its new Spectrum-XGS technology, designed to stitch together multiple datacenters into one massive ‘giga-cluster.’ Instead of endlessly pushing the boundaries of a single datacenter – where power, cooling, and geography all become crippling barriers – NVIDIA’s approach interconnects entire facilities into a seamless compute fabric.

According to CEO Jensen Huang, Spectrum-XGS builds on the company’s Spectrum Ethernet platform and adds ‘scale-across’ as a third pillar alongside the existing scale-up and scale-out models.

The result is the ability to link datacenters across cities, nations, or even continents, forming AI super-factories on a scale previously unimaginable.

Performance is at the heart of the system. NVIDIA claims Spectrum-XGS nearly doubles the performance of the NVIDIA Collective Communications Library (NCCL), the software layer that handles multi-GPU and multi-node communication. Key to this leap are features such as auto-adjusted distance congestion control and precision latency management, which tune the network to the distance between sites so that long-distance links between datacenters don’t turn into bottlenecks.
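To see why distance matters for congestion control, consider the bandwidth-delay product: the amount of data that must be in flight to keep a link busy. The sketch below is a back-of-the-envelope illustration, not NVIDIA's algorithm. It assumes roughly 5 microseconds of propagation delay per kilometer of fiber and a hypothetical 400 Gb/s link, and the function names are invented for the example.

```python
# Illustrative only: these constants are assumptions, not NVIDIA figures.
FIBER_DELAY_US_PER_KM = 5.0  # light in glass travels at roughly 200,000 km/s


def round_trip_time_us(distance_km: float) -> float:
    """Propagation-only round-trip time over fiber, in microseconds."""
    return 2 * distance_km * FIBER_DELAY_US_PER_KM


def bandwidth_delay_product_bytes(link_gbps: float, distance_km: float) -> float:
    """Bytes that must be in flight to keep a link of this length full."""
    rtt_s = round_trip_time_us(distance_km) * 1e-6
    bytes_per_second = link_gbps * 1e9 / 8
    return bytes_per_second * rtt_s


# Compare an in-building link, a cross-city link, and a cross-country link.
for km in (0.1, 10, 1000):
    rtt = round_trip_time_us(km)
    in_flight_mb = bandwidth_delay_product_bytes(400, km) / 1e6
    print(f"{km:>7} km: RTT {rtt:>8.0f} us, in-flight data {in_flight_mb:.2f} MB")
```

Under these assumptions, the in-flight data grows from tens of kilobytes inside a building to hundreds of megabytes across 1,000 km, which is why buffering and congestion signals tuned for a single datacenter would not transfer directly to links between facilities.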

The first hyperscaler to embrace Spectrum-XGS is CoreWeave, a move NVIDIA says will ‘accelerate breakthroughs across every industry.’ By unifying resources instead of simply stacking them higher, this model hints at a future where AI computing won’t be constrained by the walls of a single server farm.

The debut follows NVIDIA’s earlier foray into silicon photonics-based network switches, signaling the company’s intent to dominate not just GPUs, but the entire AI ecosystem’s connective tissue. With Spectrum-XGS, the narrative shifts from bigger datacenters to smarter, distributed ones – an architecture that could redefine the very meaning of AI scale.
